Epic 2018 day 2 conference notes

Design & Data

What does a data expert see when they look at a design problem? This panel will immerse us in the practices of two data experts, both of whom have collaborated with ethnographers, as they navigate through design challenges in different ways. Chair Jeanette Blomberg will draw the panelists and audience into conversation about synergies and challenges for interdisciplinary design collaborations.

Thomas Y Lee

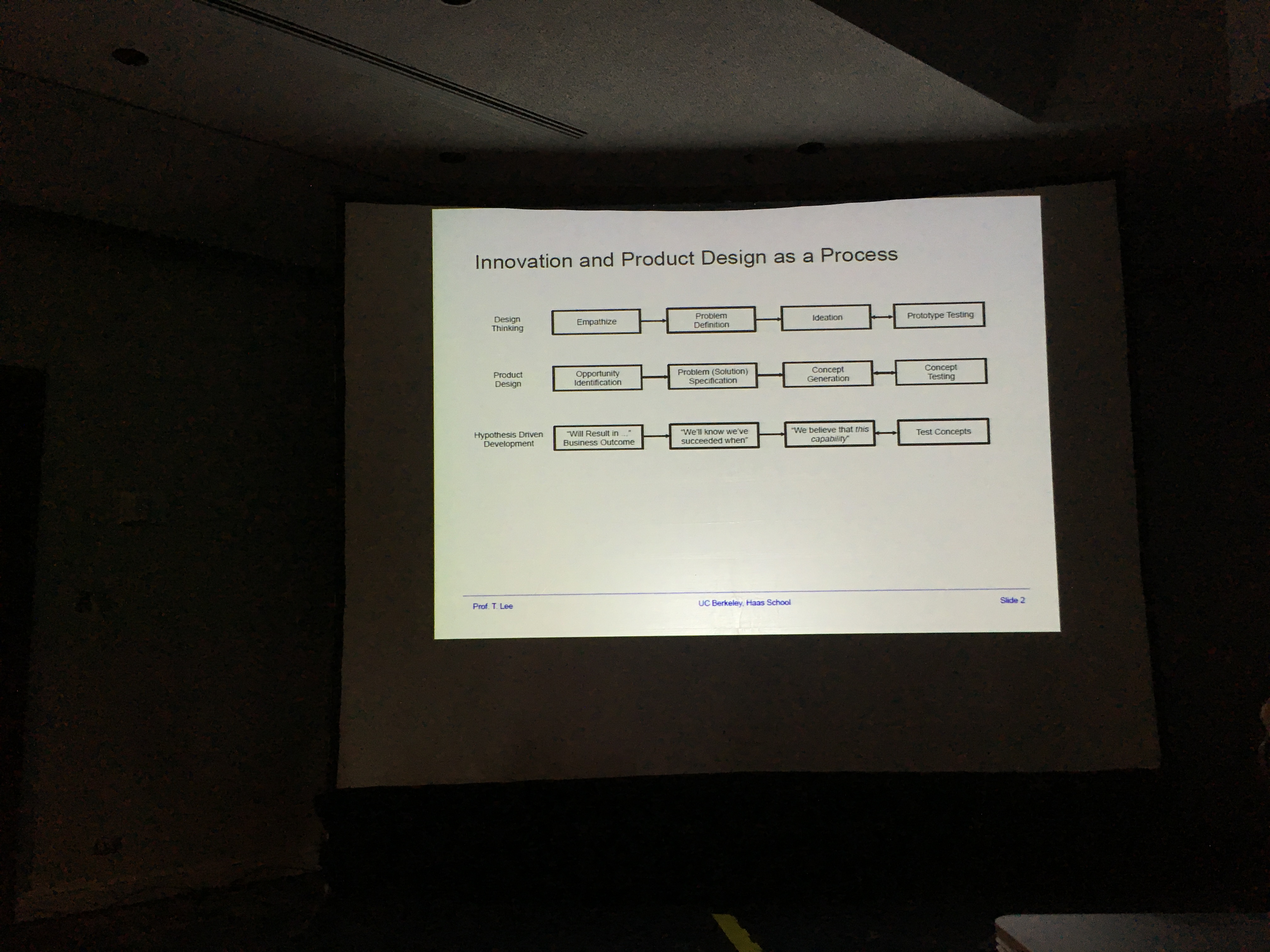

Introducing innovation and product design as a process

Whether it’s design thinking, product design or hypothesis-driven development, at a high level the process is fundamentally the same.

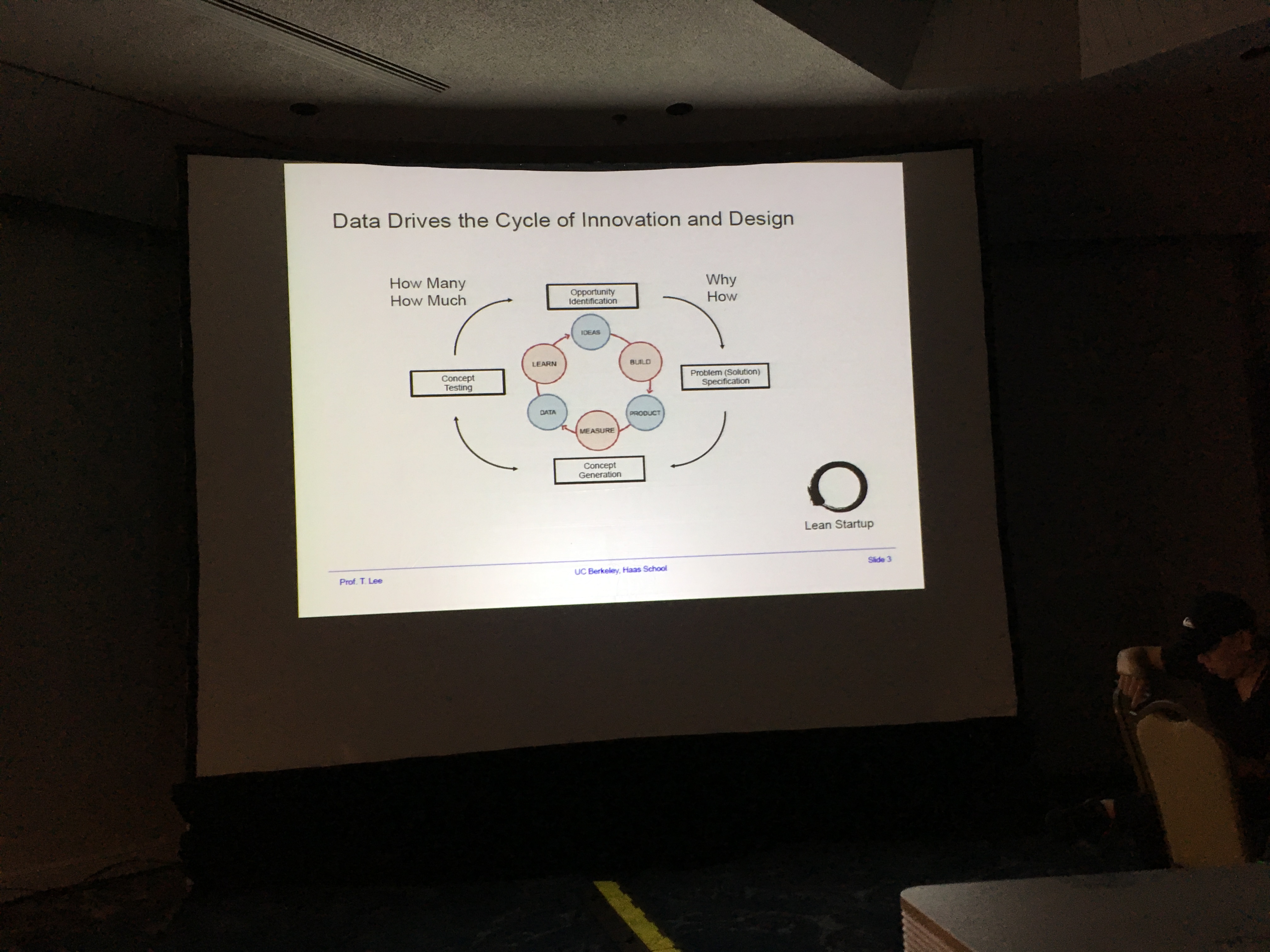

Rather than a linear or stage-gate process, in practice it is often circular and continuous.

So, the question becomes - what is the role of data in this cycle?

Data has long had a role but traditionally this is afterwards - A/B testing, pre/post, etc. In all of these different frameworks, data fundamentally answers questions of how many or how much.

Textual/sentiment analysis, for example, uses word baskets, which are ultimately just counting words.
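To make the "just counting words" point concrete, here is a minimal sketch of a word-basket sentiment score. The two baskets and the function name `basket_sentiment` are made up for illustration; a real lexicon would be far larger and this is not the speaker's own method.

```python
import re
from collections import Counter

# Hypothetical word baskets, invented for this sketch.
POSITIVE = {"good", "great", "love", "helpful"}
NEGATIVE = {"bad", "slow", "hate", "broken"}

def basket_sentiment(text: str) -> int:
    """Return (count of positive words) minus (count of negative words)."""
    words = Counter(re.findall(r"[a-z']+", text.lower()))
    positives = sum(words[w] for w in POSITIVE)
    negatives = sum(words[w] for w in NEGATIVE)
    return positives - negatives

score = basket_sentiment("Great staff, really helpful, but the checkout was slow")
```

The score says how much positive or negative wording there is, but nothing about why the customer felt that way.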

In order to develop an intimate understanding of a problem, we really need to ask questions about ‘why’.

So, the question is:

“How can we apply processes related to data to begin to answer questions about why and how?”

Example / story:

Ace Hardware store in SF. I went in and bought gloves, a sweep, a spray hose and Clorox. As soon as I put the items on the counter, the check-out person said: ‘Oh, you’re cleaning your compost bin.’

Can we identify customer intent based on items purchased?

Customers aren’t buying a 1/4 inch drill bit, they’re interested in a 1/4 inch hole. But push it further - they want to hang a picture, secure a bookcase, etc.

Does domain knowledge (recipes or known projects) improve our ability to identify projects?

Going further: does contextual knowledge (customer demographics) improve our ability to identify projects?
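One way to picture the "recipes" idea is to score a basket against a catalogue of known projects by item overlap. This is a rough sketch only: the `PROJECTS` catalogue, its item lists and the use of Jaccard similarity are all assumptions for illustration, not the method described in the talk.

```python
# Hypothetical "recipes": known projects and the items they typically need.
PROJECTS = {
    "clean compost bin": {"gloves", "sweep", "spray hose", "clorox"},
    "hang a picture": {"drill bit", "wall plugs", "screws", "spirit level"},
    "secure a bookcase": {"drill bit", "wall plugs", "screws", "brackets"},
}

def jaccard(a: set, b: set) -> float:
    """Size of the overlap divided by size of the union (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def rank_projects(basket: set, projects: dict = PROJECTS) -> list:
    """Return (score, project) pairs, best match first, ties broken by name."""
    scored = [(jaccard(basket, items), name) for name, items in projects.items()]
    return sorted(scored, key=lambda pair: (-pair[0], pair[1]))

ranking = rank_projects({"drill bit", "screws"})
```

With only a drill bit and screws in the basket, the picture and bookcase projects tie at 0.5, which is exactly where extra context such as customer demographics might help break the tie.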

Second example/story: emergency services wait times. 2 hours.

In emergency services, wait time is an inverse function: the sicker you are the shorter you wait.

We all know we need to wait, but what can we do about it? Because at the limit, people can die.

A traditional researcher would look at this and say: oh, this is a non-pre-emptive priority queue. I’m going to map this thing out, identify the bottlenecks in the process, and design a service improvement to remove the bottleneck.
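For readers unfamiliar with the term, a non-pre-emptive priority queue can be sketched in a few lines. The sketch below assumes a single clinician, times in minutes, and a lower priority number meaning a sicker patient; the function name `simulate` and the patient data are invented for illustration.

```python
import heapq

def simulate(patients):
    """patients: list of (name, arrival, priority, service_time).
    Lower priority number = sicker. Returns {name: minutes waited}."""
    pending = sorted(patients, key=lambda p: p[1])  # order by arrival time
    waiting = []  # heap of (priority, arrival, name, service_time)
    waits = {}
    clock = 0
    i = 0
    while i < len(pending) or waiting:
        # Nobody waiting: idle until the next patient arrives.
        if not waiting and pending[i][1] > clock:
            clock = pending[i][1]
        # Admit everyone who has arrived by now.
        while i < len(pending) and pending[i][1] <= clock:
            name, arrival, priority, service = pending[i]
            heapq.heappush(waiting, (priority, arrival, name, service))
            i += 1
        # Treat the sickest waiting patient; earlier arrival breaks ties.
        priority, arrival, name, service = heapq.heappop(waiting)
        waits[name] = clock - arrival
        clock += service  # non-pre-emptive: treatment is never interrupted
    return waits

waits = simulate([("A", 0, 3, 30), ("C", 5, 2, 15), ("B", 10, 1, 20)])
```

Here B arrives last but is the sickest, so B waits 20 minutes while C, who arrived earlier, waits 45. Nobody interrupts A's treatment, which is what "non-pre-emptive" means.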

Instead of doing this, we said: traditional process design doesn’t apply to healthcare, because no patient is a widget. Every patient is unique. So, build a sequence algorithm to track each patient, and ultimately try to find a discrimination value and push it upstream. Point of care testing - tests that can be performed at the bedside. If you can move tests to the bedside, recognising tradeoffs in cost vs efficiency, which would you do it for?

Big ideas:

Explore ‘why’ and ‘how’ with machine-generated data, in addition to ‘how many’ and ‘how much’

Approach the analysis of machine-generated data as a complementary, not competing approach

- start with hypotheses (semi-supervised methods)

- generate hypotheses (eg. narrow the scope of analysis)

Analyse: “What people do and how systems perform” and not just “What people say”

How can I use data to generate hypotheses, and then turn around and use ethnography to really look into the right areas?

Marc Böhlen

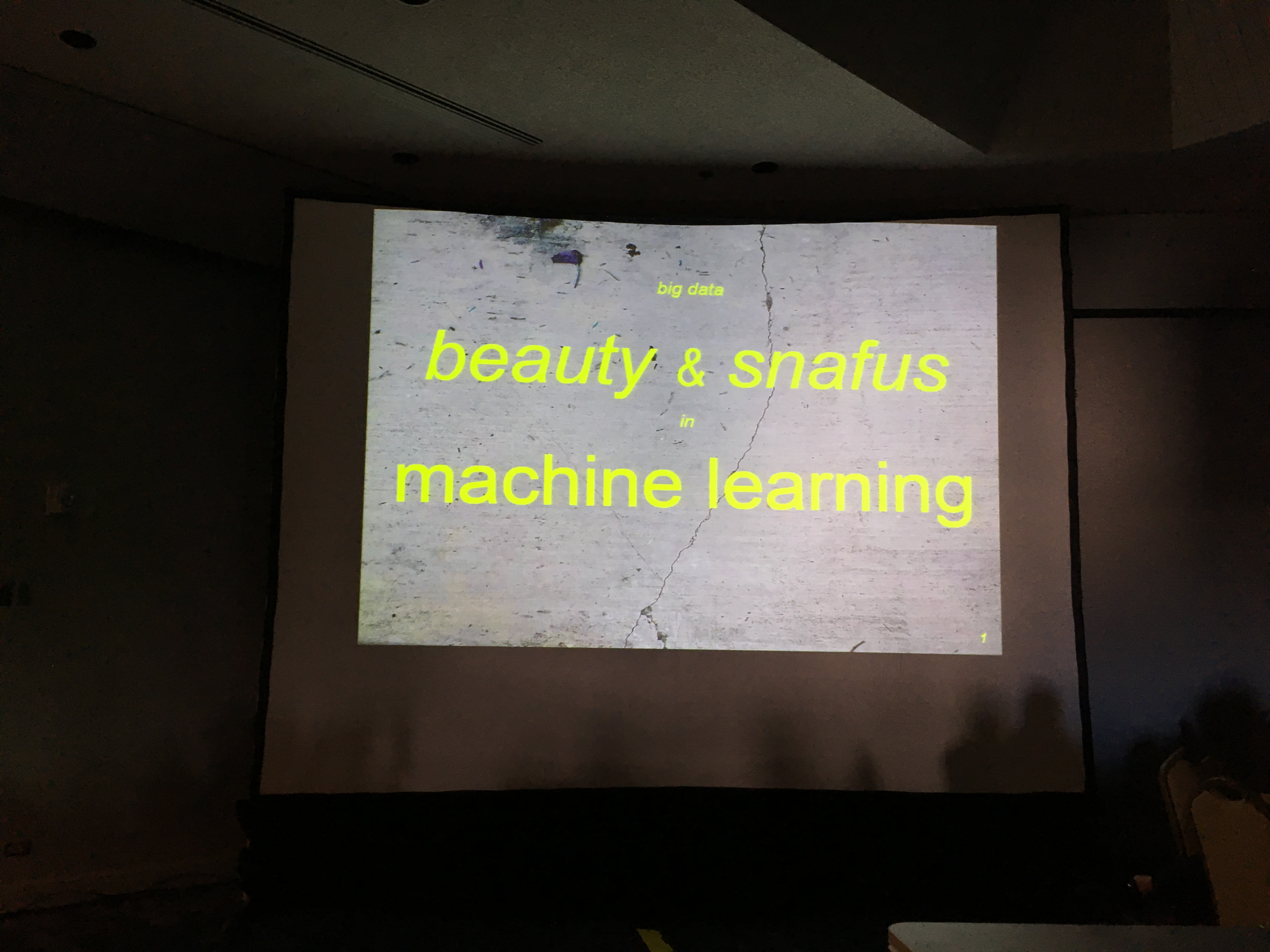

Can machine learning algorithms detect beauty?

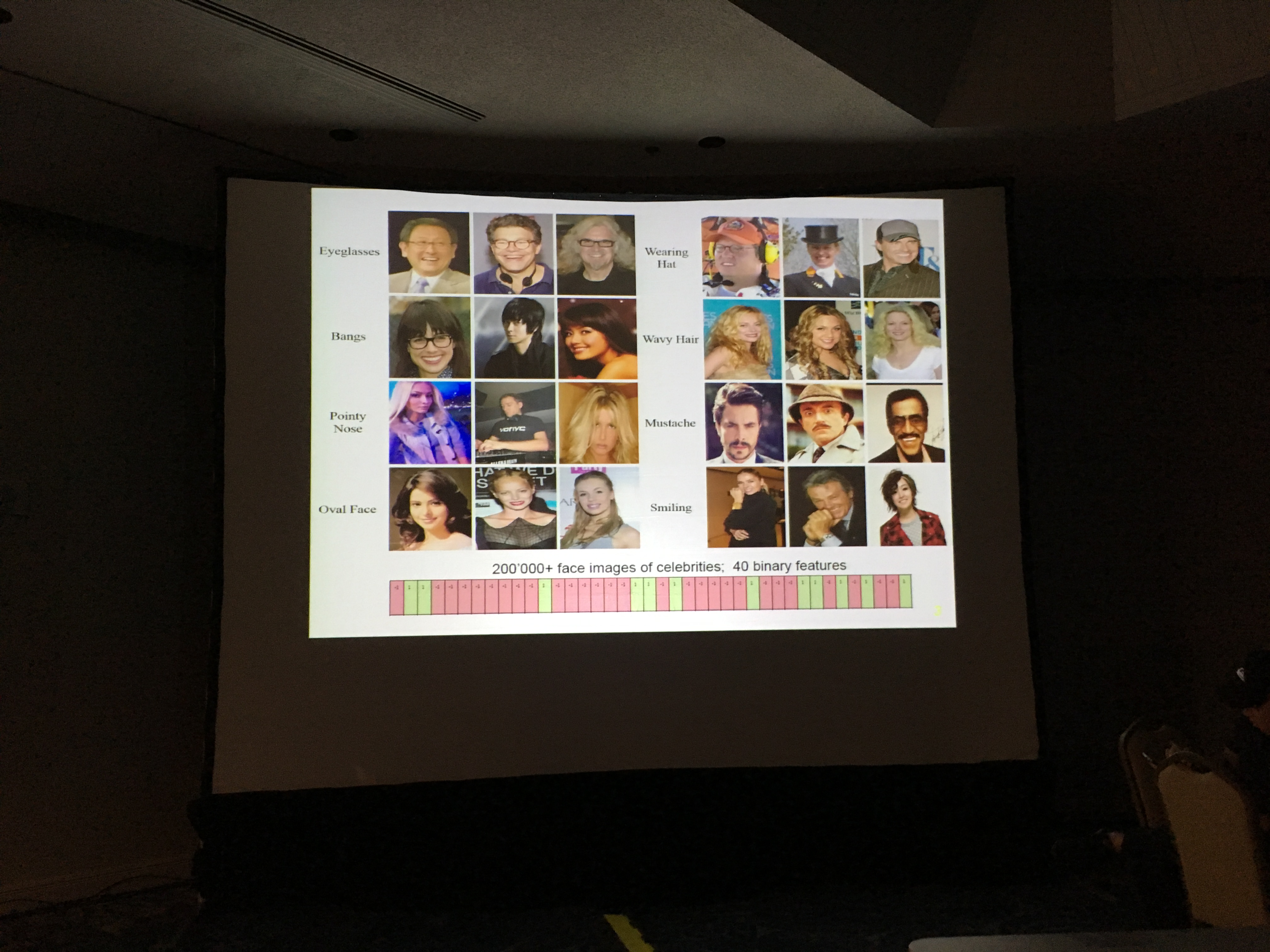

If we try to answer this question in the affirmative, we need an approach and some data. But data is hard to get and hard to make. So, borrow some data: the CelebA data set from the Chinese University of Hong Kong. 200k face images of celebrities; 40 binary features.

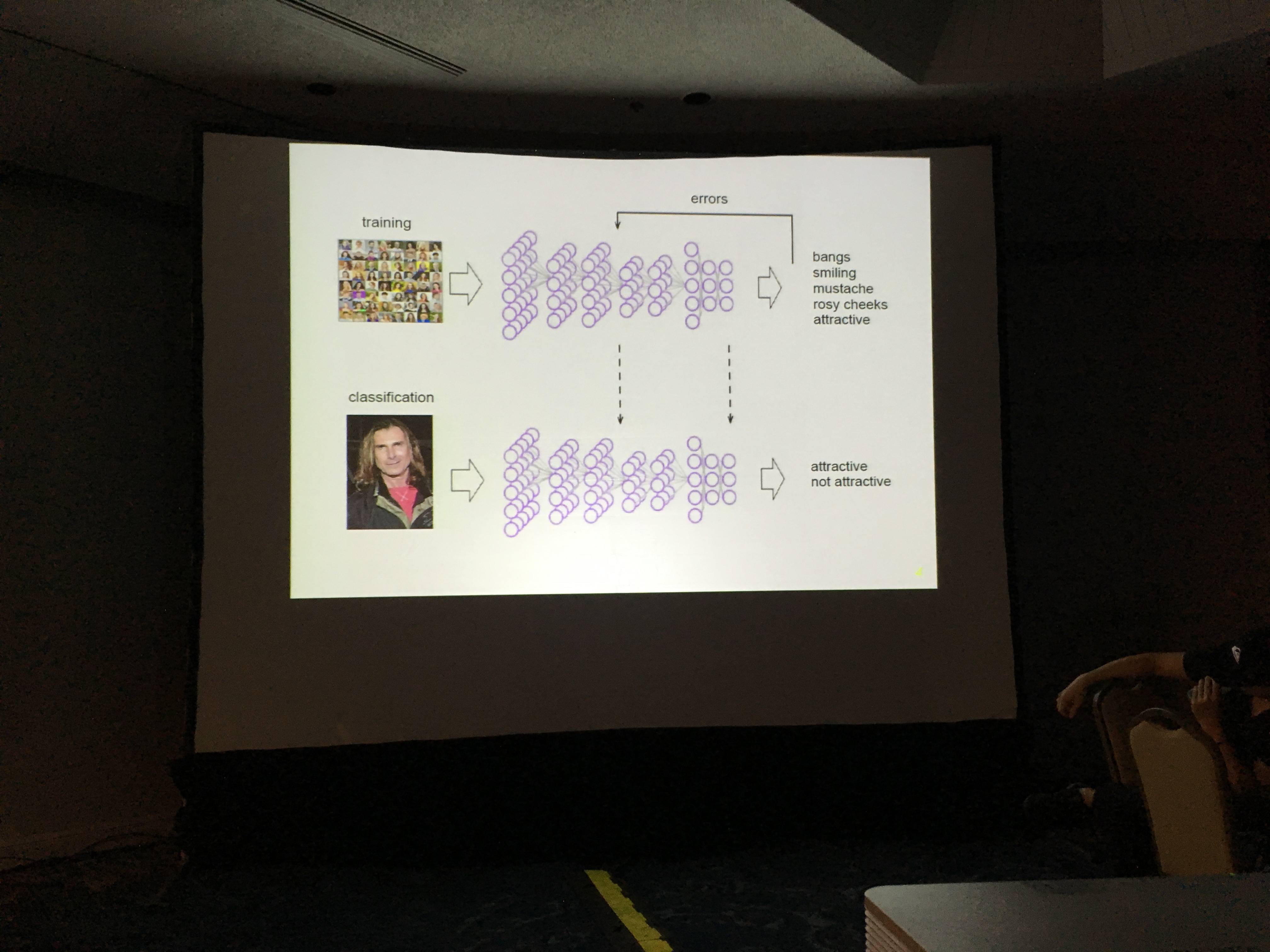

What about approach? The current paradigm is deep learning. i.e. convolutional neural network for image classification.

3 steps:

- Data collection - needs to be large amounts and high quality. Labels have to be correct and dimensions flattened to fit into the framework.

- Training.

- Test it: introduce a new image and see how the model classifies it.

So the question is: what’s the difference between testing data and training data? How can you get something that’s precise and can be generalised?
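The training/testing distinction can be shown with a toy example. The sketch below is deliberately not a convolutional network: it swaps in a tiny nearest-centroid classifier so it runs with the standard library alone, and all function names are assumptions for illustration. The point is only the held-out split: the model is fitted on one part of the data and scored on rows it has never seen.

```python
import random

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of rows and hold back a fraction for testing."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]

def fit_centroids(train):
    """train: list of (features, label). Returns {label: mean feature vector}."""
    sums, counts = {}, {}
    for features, label in train:
        acc = sums.setdefault(label, [0.0] * len(features))
        for k, value in enumerate(features):
            acc[k] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def predict(centroids, features):
    """Pick the label whose centroid is closest (squared Euclidean distance)."""
    def sq_dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))

def accuracy(centroids, rows):
    """Fraction of rows whose predicted label matches the true label."""
    hits = sum(predict(centroids, f) == y for f, y in rows)
    return hits / len(rows)
```

Accuracy on the training rows measures how well the model fits what it has seen; accuracy on the held-out rows is the better hint of whether it generalises.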

Process raises many questions: From network perspective, when do you use a complex framework and when do you use a simple one? From a data perspective, what do you do with noise? i.e. false negatives/positives. Machine learning algorithms are supposed to be immune to that because of generalisation, but we want to

The university hired 50 workers from mainland China to compile that data, at 100 binary decisions per hour per worker. No wonder there are mistakes: it’s a rushed job.

There’s a deeper problem than just the outsourcing of the labour. When you make these data sets, you are making a world. You are deciding what to include and what not to include.

There’s always slippage. These models get made and they get used for something slightly different, and it’s hard to track.

Big data training sets problems:

- Outsourcing of labour in data collection and therefore low quality of it.

- Slippage in usage of those data sets.

- Lack of articulation of the assumptions that went into the selection criteria in the first place.

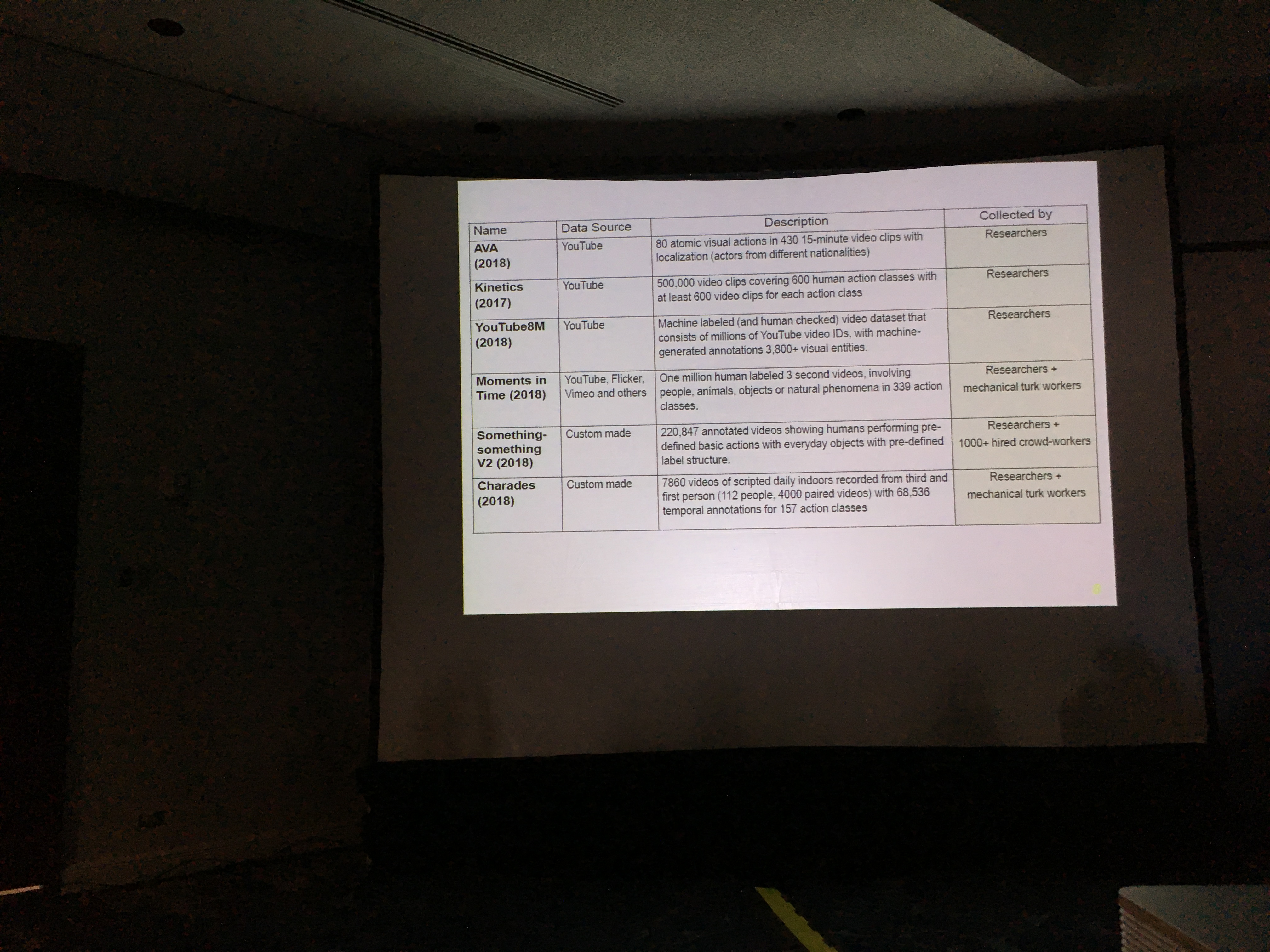

These are the video databases in 2018:

An alternative approach, as an experiment: action camera footage as the foundation for these databases.

This footage comes closest to being ethnographic in nature.

Also this data source is easy to access and make. And now the problems just begin:

- can extract metadata from the feed to say something very specific about activities and

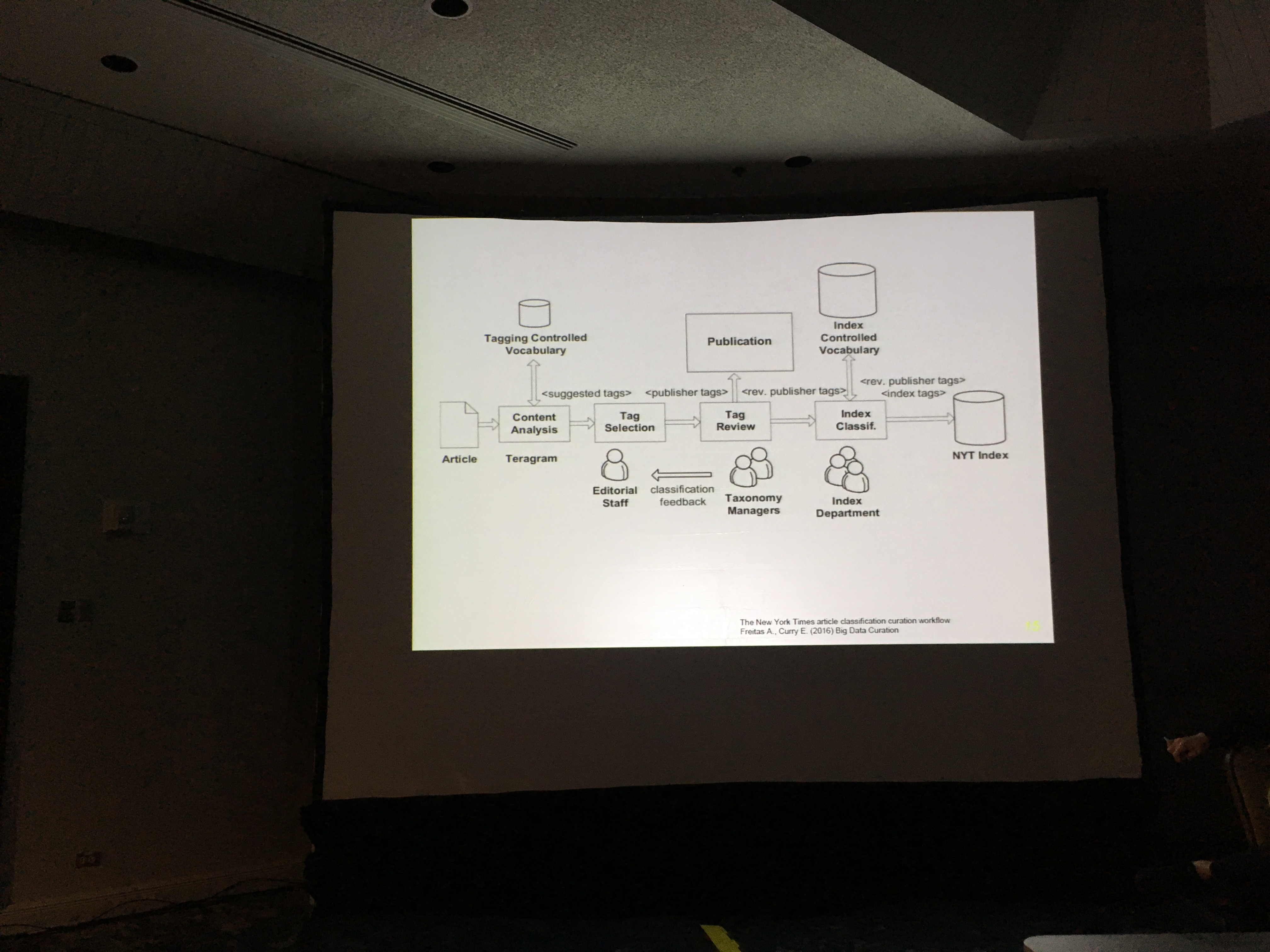

Shows NYT’s article classification curation workflow [very similar to ours at the FT] - involves humans-in-a-loop with machines.

Q (from Thomas to Marc): If you use a commercial off-the-shelf tool, it has assumptions and limitations built into it. What advice do you have for people starting out?

A: Hard problem, but an intermediate approach is to compare across products. The convenience factor is really seductive. If it kinda works but you don’t know why it doesn’t fully work, don’t do it.

Q (from Marc to Thomas): Intrigued by the ‘why’ question. How do you separate out the components of why?

A: Total quality management has its roots in W. Edwards Deming. In application, as a process, people use the back-of-the-envelope heuristic of ‘The 5 Whys’ - this gets you closer to the root causes: what is the user really interested in? Yes, there are other dimensions of ‘why’, but those dimensions are all secondary to the ultimate purpose.

Q (to Tom): Why not just ask the clerk? (in the Ace Hardware example)

A: Machine learning is all about learning from experience, so yes, you can ask the clerk. But which clerk do I ask? How many should I ask? Now I need to aggregate and reduce.

Q: Class one / Class zero error tradeoff, especially when it comes to why in medical contexts.

A: There isn’t a broad heuristic that works. I started out in this field in medical informatics. I’m skeptical about applying too much automation to medical information, particularly because context is really important. Even just San Fran vs Philly - in SF you’re worried about meth; in Philly you’re worried about cocaine. Trying to generalise is a fool’s errand in these circumstances because context is so important.

Q: How to organise teams of humans to create high quality data sets? What are good examples?

A: The 2018 crop of video datasets - there’s good work in there. The challenge is - if you want the voice of the world in there, it can’t be just the researchers saying we pick this or that. How do you combine getting authentic, real input with material that’s useful for machine learning, which is finicky and fragile and difficult to fit into?

Shifting Power & Agency

Themes: Engaging evidence. How to use ethnography to effect change in a system.

Revitalising Openness at Mozilla: A Mixed Method Research Approach

Rina Jensen (@rinajensen) · Mozilla

Big takeaway: importance of mixed-method research.

Open source is the main competitive advantage for Mozilla. But Mozilla is facing a dilemma. We’re losing market share in a market where we cannot compete directly.

History from 2004 (release of Firefox) to today. As Mozilla grew, it became more open source in name than for real.

“We now have a significantly large enough population of folks at Mozilla who don’t have that history and don’t know the history of Mozilla being built by the community.”

Teams were spread out, making it hard to collaborate and learn.

Our dilemma was not just organisational or cultural but existential.

Wanted to revitalise Open, but institutionalised knowledge had been lost.

So, engaged diverse group of researchers. Set forth 3 questions:

- What is the current state of open collaboration at Mozilla?

- What has changed and why?

- What are the other examples out there that Mozilla could draw from?

For the first time ever, did a deep-dive data analysis of contributors.

Learnt that contribution was actually growing. But contribution has centralised around 6 projects, and Firefox was not one of those.

The feeling of being disconnected also came down to how the collaboration was happening. We had adopted closed systems - Slack, etc, that required people to sign in.

“What people get frustrated about, what is the thing, is that the thing all of a sudden comes out and look different.”

Diversity has a real impact - other open source projects worked on diversity, whereas Mozilla had never deliberately done so.

Outcomes since 2017 December: Specific projects on diversity, launching 30+ projects to revitalise open source.

Holistic Understanding of Digital Financial Services Users: An Integrative Approach between Applied Anthropological Research and Big Data Analytics

Gisela Davico & Soren Heitmann · International Finance Corporation

Financial inclusion. Work in Sub-Saharan Africa to scale financial services. 57% of adults in Sub-Saharan Africa are still financially excluded.

Getting people to sign up is a challenge, and getting them to use the services after signing up is also a challenge.

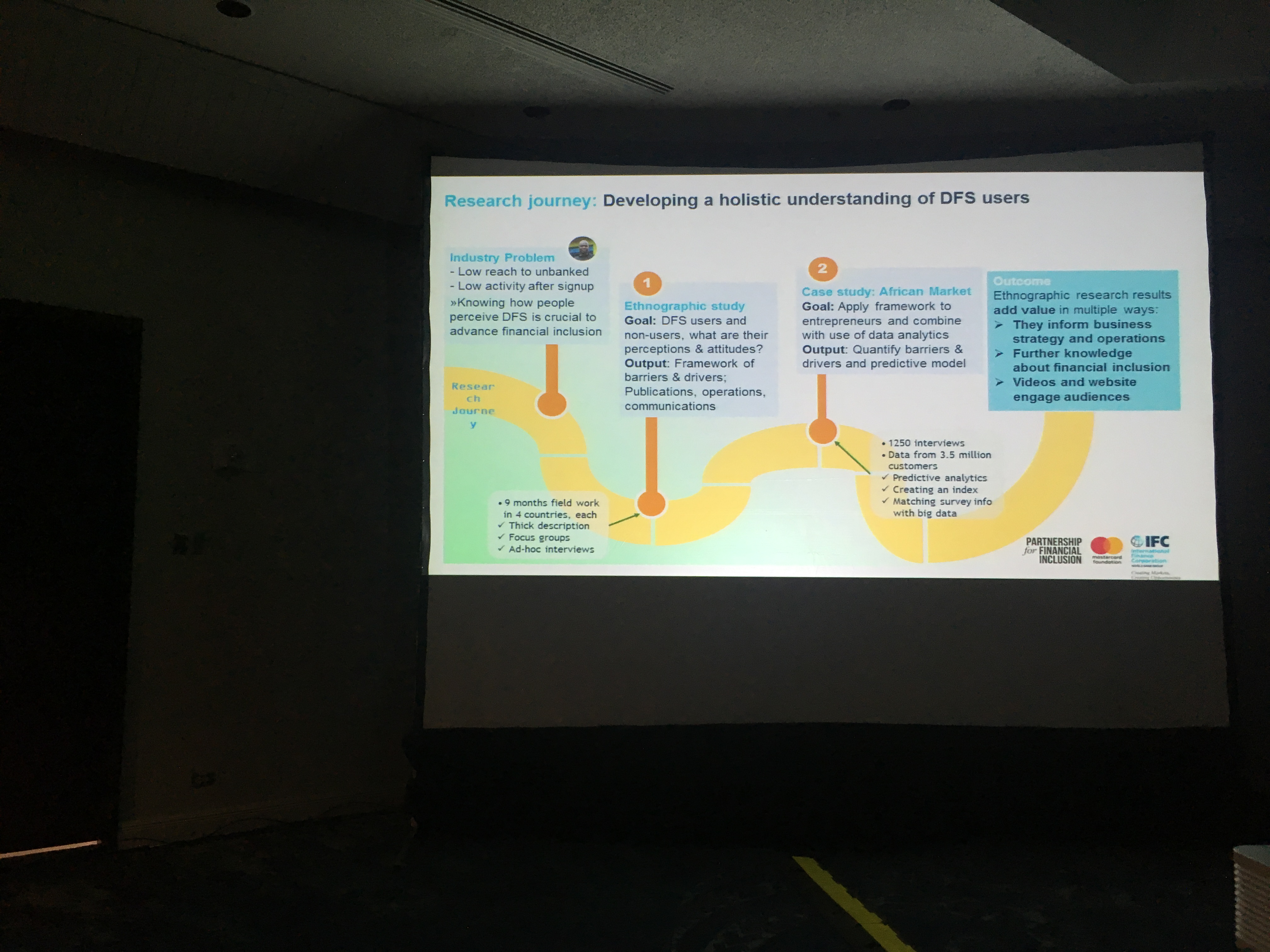

Research journey:

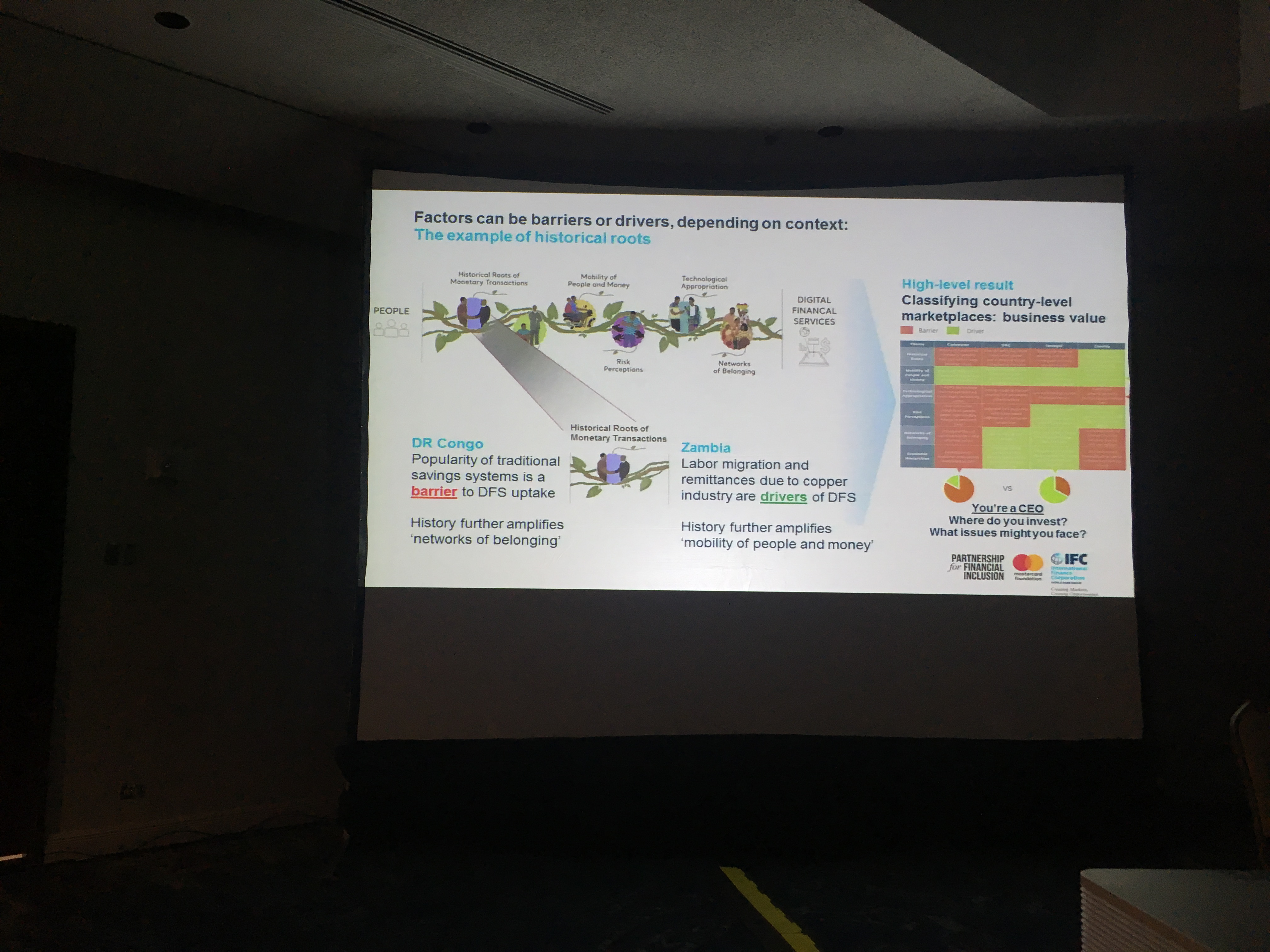

Found 6 factors that affect trust:

- Historical roots of money transaction

- Mobility of people and money (i.e. people already have experience with sending money)

- Economic hierarchies (i.e. banks are not for me or people like me)

- Risk perceptions (i.e. would my money be safe?)

- Technological appropriations (i.e. takes away excuses, makes people interact in new ways)

- Networks of belonging (i.e. I don’t need the bank - I have informal social networks that give me what I need already)

How these six factors can be used:

Also - they made a game to convey the results!

Challenges of the project: mainly around project management.

Gaming Evidence: Power, Storytelling and the “Colonial Moment” in a Chicago Systems Change Project

Nathan Heintz · Roller Strategies

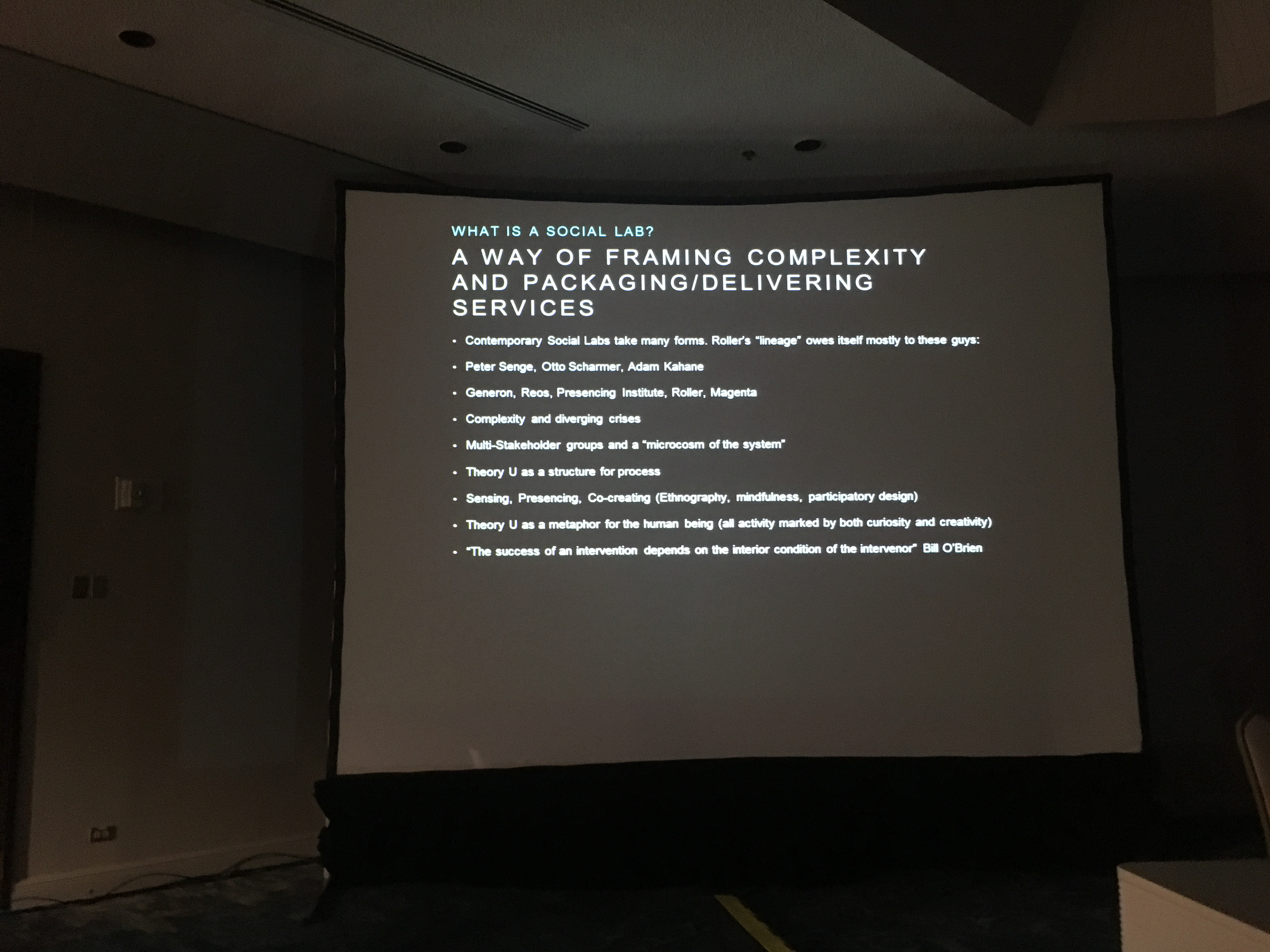

Social Lab: diverse participants, aims to have a systemic impact.

Uses mixed methods to frame complexity and package/deliver services:

There’s a north/south divide to the project: “Chiraq vs The Loop” (“Third World” vs Moneyed Institutions). Also an insider / outsider divide.

This created contested narratives, i.e.:

- Chicago is a place where people get shot.

- Chicago is a place of ruthless political culture.

This creates lots of questions around ownership - who gets to define impact, who is this project for, etc

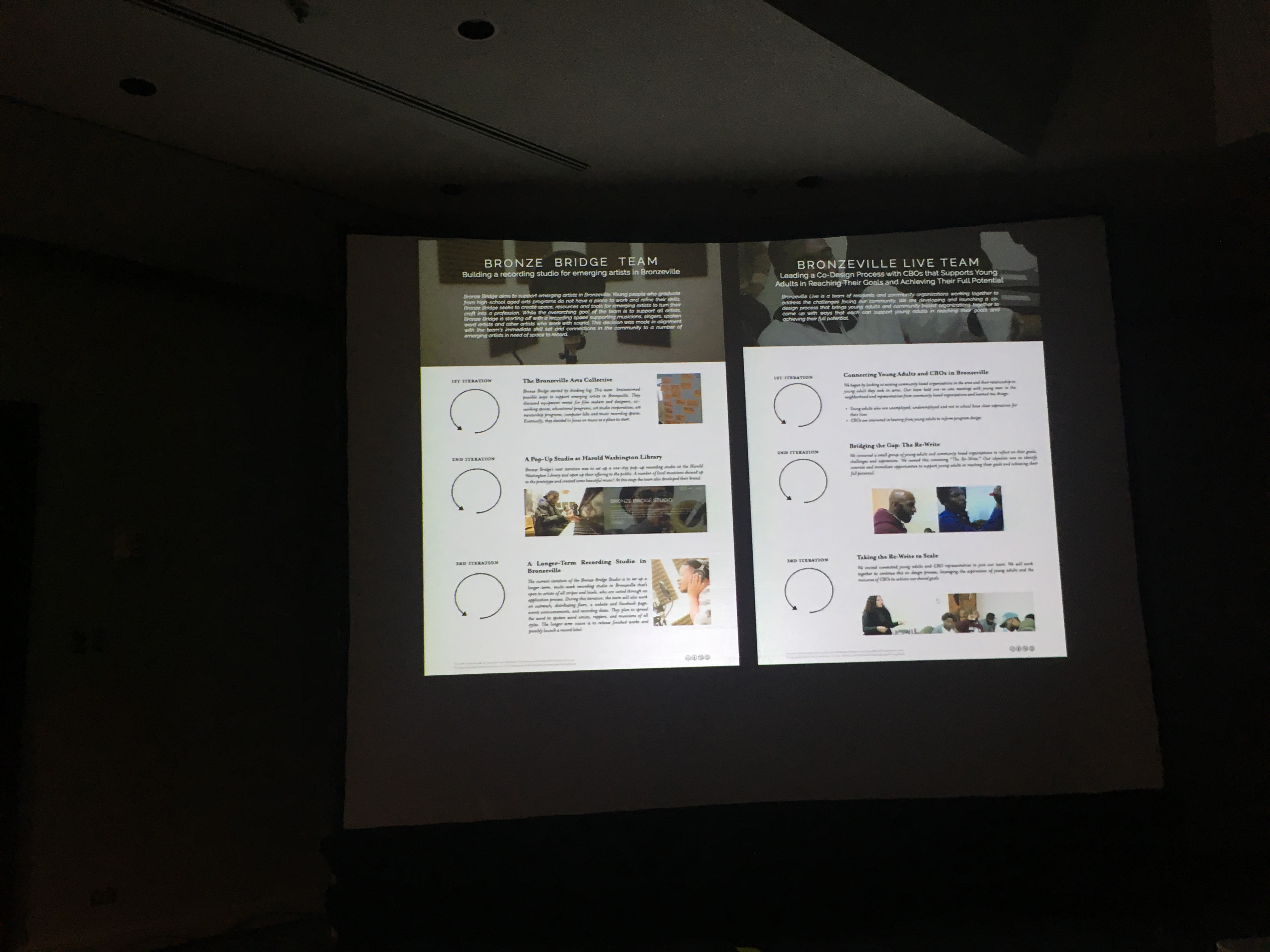

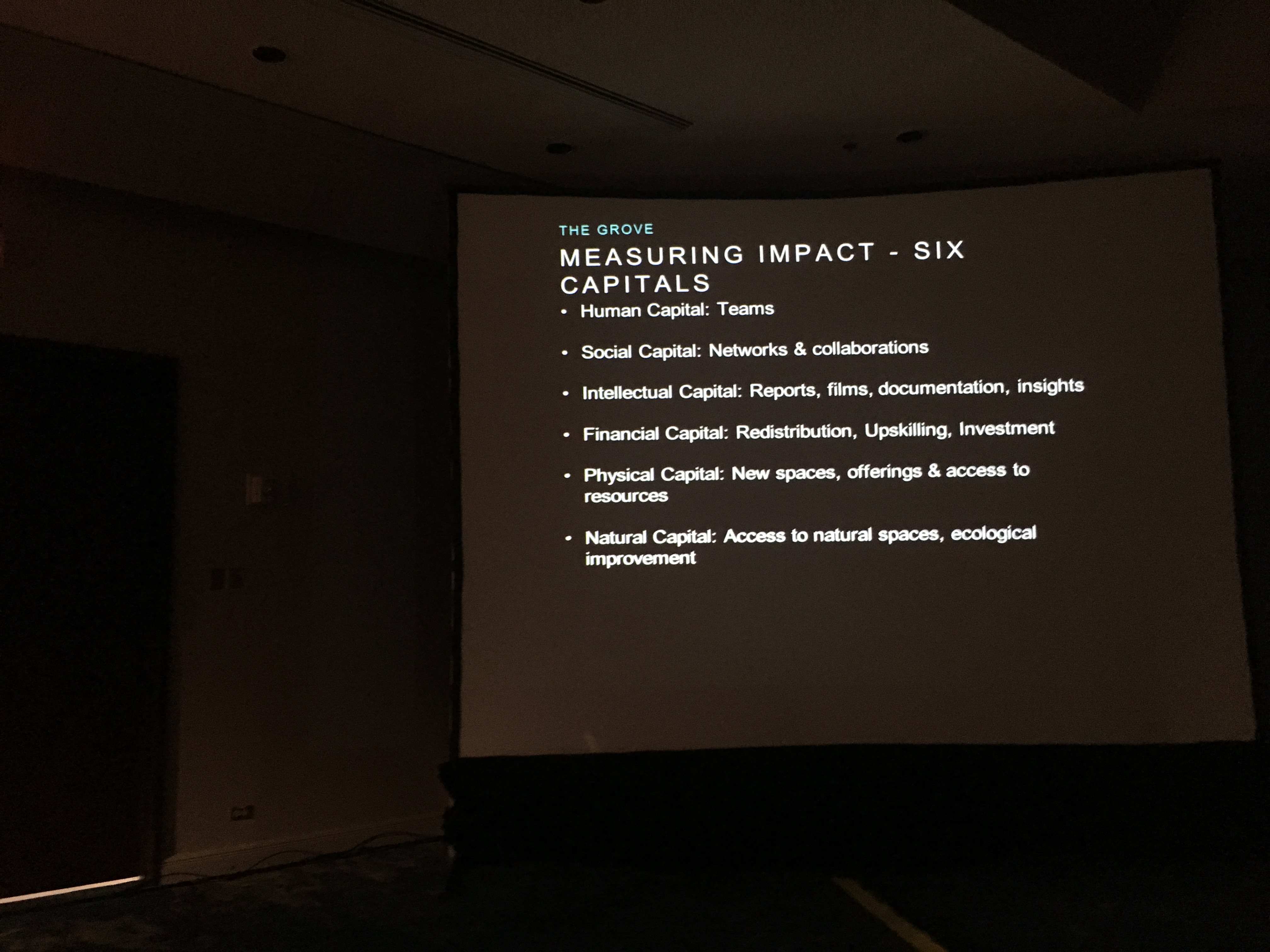

Project called The Grove: How can we work together to support young people in Chicago to develop resilient livelihoods?

Place matters - originally was in a gallery in a different place. Later moved back to Bronzeville.

Even naming matters:

We had some difficulties around trust and the pace of work: the pace of business vs the pace of social change.

Another example of shifting thinking: ‘scarcity mentality’ (do what we can with resources we have) vs. Business case thinking (figure out how much it’ll cost to do what they want to do, and ask for the funding needed to do it)

Most projects went through several iterations and evolved over time:

Measured impact across six dimensions:

Embodied Perspectives

Working With Intuition

Anish Nangia (@oddclaw) · eBay Inc.

What is not evidence? Researchers might answer: ‘intuition’.

Started life as an electrical engineer. Felt engineering is almost the opposite of intuitive. Circuits are logical.

After some years, decided he wanted to design apps.

Engineering and design seemed the opposite of each other.

If engineering was about designing the best car, design was about ‘why do you need a car in the first place?’

The intuitive, human side of design was completely new to him.

When time came for his thesis in grad school, wanted to study how intuition and logic came together in the real world.

Interviewed a woman who was really good at her job, who voiced worry about something she was working on. When asked why she didn’t voice that concern, she said: “I’m just a developer. I don’t have the data to back it up.”

Logic-driven left-brain culture was something he was really familiar with. Had to unlearn that to listen to his intuition.

We don’t give our subconscious mind the due it deserves. As humans, we intuit and feel first, and think and rationalise second.

As researchers, many are probably good at listening to our intuition, but can we help those we work with do so too?

Chances are, even if you are attuned to your intuitions, you probably don’t feel good talking about it.

Q: How did you make that transformation?

A: Didn’t even know about the word ethnography until three years ago. Became more empathetic with engineers and the challenges they face within an engineering culture. Sometimes it’s plain old listening.

Q: People who work across disciplines

A: I feel I am lucky to be able to jump around and try different things, but it comes with a lot of imposter syndrome and feeling like you don’t belong all the time.

Q: translating intuitions to actions

A: In retros, have people write down what they were feeling. These can often be traced back to specific bits of work in the project. So, use intuition as a starting point. It helps people feel heard. We all have intuition but we also have to be evidence-based.

Q: Whose intuition matters? How does power affect actions stemming from intuition?

A: Really good question. Everyone’s intuition always matters. There are maybe things we can do to help balance out the differences in power in organisations.

Contextual breathing - opening up in a closed in world

April Jeffries · Ipsos

We all have ways of making sense of the world. Grew up on the insular streets of NY. worked in at weekends, I’m an artist

My bi-polarness is what helps me make sense of the world. I inhale to get close, and exhale to create spaces.

Similar dynamic happens in ethnographic research.

Balance between convergence and divergence.

Shallow breathing cannot support us. All it does is lead us to tribal connections that reinforce what we already know.

We often take shortcuts to avoid the mental effort. But we are becoming ill-equipped to handle differences.

We cannot become a society of shallow breathers.

Diagnosing the World Pulse

Christopher Golias · Independent

Acupuncturist’s fingers, vs. doctor’s stethoscope.

Analogous to:

Ethnographer vs. Data scientists

Both are diagnosing.

As researchers, data are our lifeblood, but also our subject’s blood.

What evidence do we use or discard? What is it like for remote participants to enter into a relationship with someone they will never meet? I’m blood brother to hundreds

Contemporary data collection practices are bleeding the world of its data.

Data and research as haruspex

When we mine data, who or what is being mined?

When we go to the data pool, what do we see? Things that were, things that are, and things that will be

To what end are we slicing and dicing people’s emotions and behaviour?

Are we healing societies or tearing them apart?

In the end, who can diagnose the heart? Researcher? forensics? lover?

The Organizational Context of Data

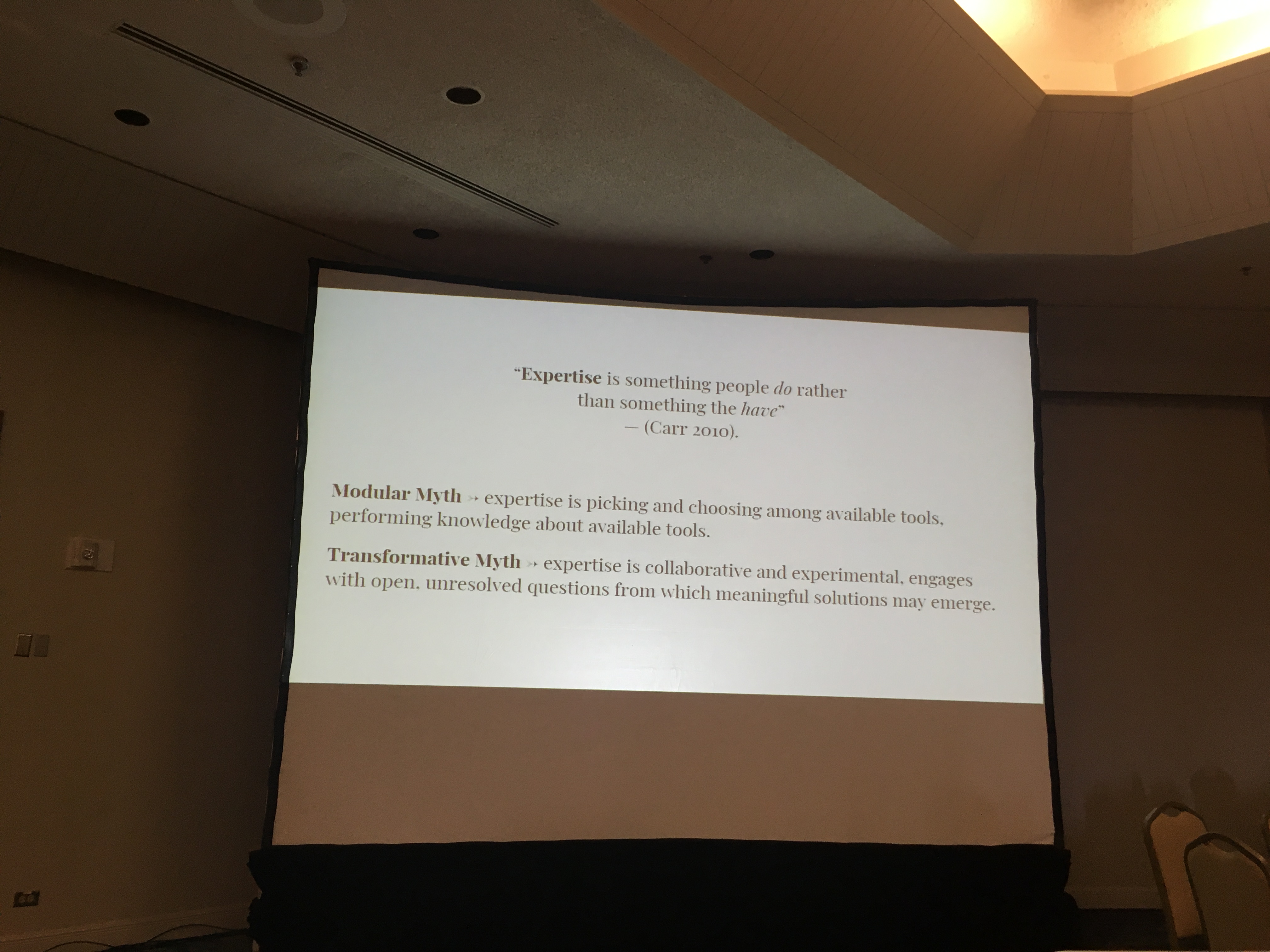

How Modes of Myth-Making Affect the Particulars of DS/ML Adoption in Industry

Emanuel Moss · CUNY; Friederike Schuur · Fast Forward Labs

This paper is a reaction to

Helping an entertainment media company develop a data strategy:

But just weren’t communicating - what they said and what the client heard was really different.

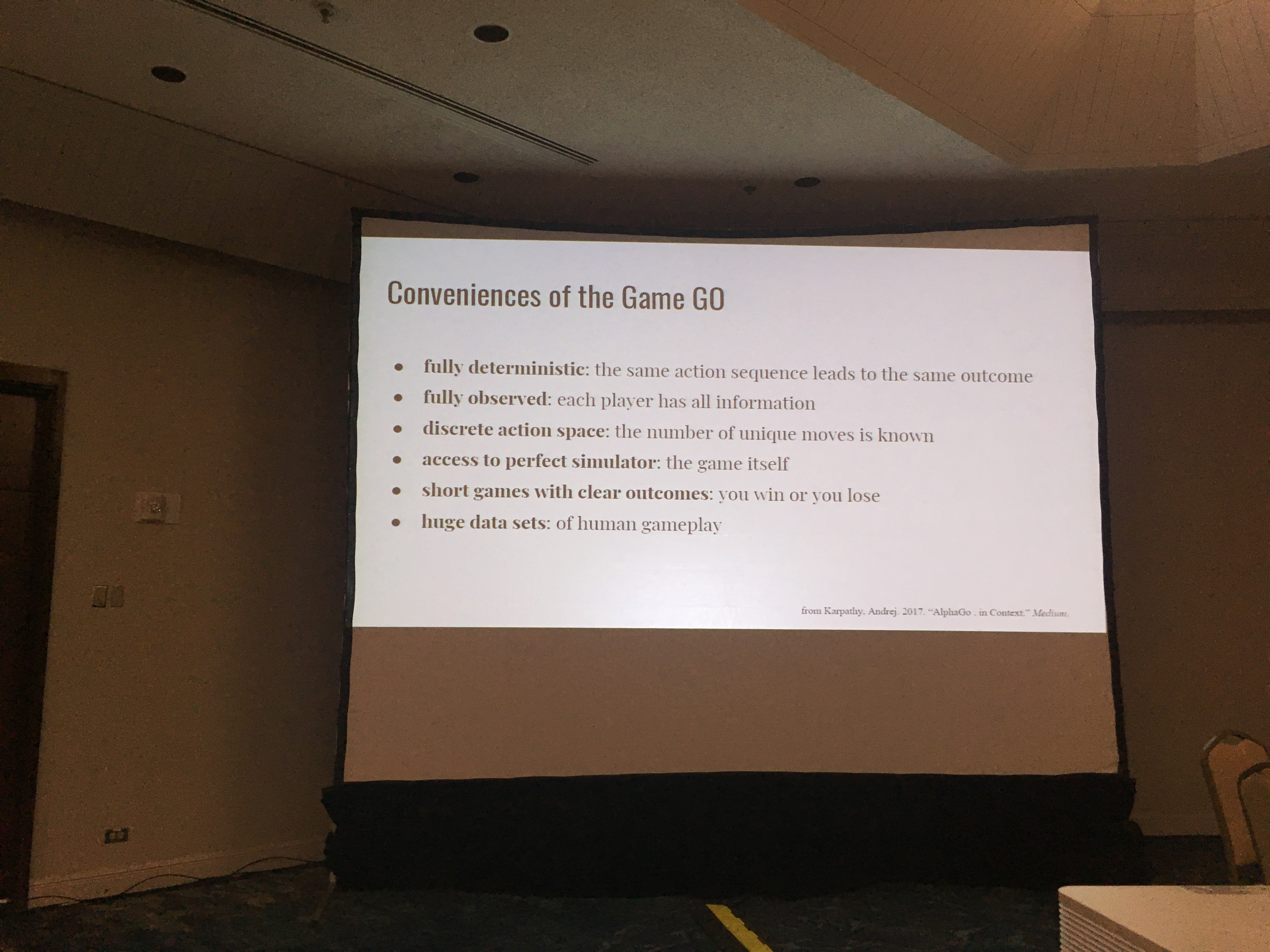

For data science and machine learning, one of the spectacles that helped adoption was AlphaGo.

This led to the myth that AI and machine learning must be ‘intelligent’ because Go is complicated, and therefore that AI and ML can do things of a similar level of complexity and difficulty as Go.

But those in the field know that’s not true. The thing that the AlphaGo team did well was that they identified a hard problem that machines could solve by exploiting conveniences:

Consider electricity as an alternative metaphor for AI and machine learning.

In early days, nothing was standardised, from voltages to AC vs DC. The idea that investment in electrifying would pay off was not obvious at all.

DS/ML as an emerging capability is a story about complexity that requires dedicated expertise to construct new interfaces that mediate between the different needs of different parts of an organisation.

So back to the case study - the org needed to learn how to crawl before it could run. For example, the org needs to be clear and precise about what they are counting.

Machine Learning and Data Science as modular, vs ML/DS as emergent.

The framing matters because, for example:

Q: Local performances as performances

A: Spectacles are continually produced within the industry for itself (at data science conferences). We were also engaged in these performances when we went in to do these workshops. We showed these examples to deconstruct them. But what was interesting is how durable the myths are even when we deconstruct them.

Researchers are really comfortable with uncertainty but organisations (and especially engineering orgs) are not. The sublime overwhelms.

Acting on Analytics: Accuracy, Precision, Interpretation, and Performativity (Case Study)

Jeanette Blomberg, Aly Megahed & Ray Strong · IBM

Case study - Ins and Outs of doing data analytics in an organisation.

Background: Organisational practices produce digital traces, and the thinking is therefore that data analytics leads to better managed organizations

Problem: Org data are scattered in personal databases, making them costly to centralise.

Also: the meaning of org data depends on work practices and policies, and it takes detective work (into the context of data in the midst of org changes) to have confidence in the data.

Also requires: organisational problems framed as data problems - there’s a risk that this is the wrong framing.

Acting on analytics requires org actors to trust the analytics and have the authority to act.

Case study: IT infrastructure-as-a-service sellers

Participants: Cloud go-to-market strategy team, plus sales orgs, plus research team.

Data sourcing and cleansing requires Approvals, Aggregation, Entity resolution - a lot of work.

For this project, worked off of 3 years of revenue data + client registration data

Entity resolution: what do we call things, and at what level do we name them? E.g. with global companies, should that be one company or multiple companies depending on geography? Or one per billing address? etc.
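The "at what level do we name them" question can be sketched as a choice of grouping key. The example below is an illustration under stated assumptions: the record fields (`name`, `country`, `revenue`), the suffix list and the function names are hypothetical and are not taken from the IBM project.

```python
import re
from collections import defaultdict

# Assumed list of legal suffixes to ignore; far from exhaustive.
LEGAL_SUFFIXES = {"inc", "ltd", "llc", "gmbh", "corp", "co"}

def normalise(name: str) -> str:
    """Lower-case, strip punctuation and drop legal suffixes."""
    tokens = re.findall(r"[a-z0-9]+", name.lower())
    return " ".join(t for t in tokens if t not in LEGAL_SUFFIXES)

def resolve(records, level="global"):
    """Sum revenue per entity. level='global' merges all geographies;
    level='country' keeps one entity per (name, country)."""
    totals = defaultdict(float)
    for record in records:
        key = normalise(record["name"])
        if level == "country":
            key = (key, record["country"])
        totals[key] += record["revenue"]
    return dict(totals)

records = [
    {"name": "Acme Inc.", "country": "US", "revenue": 100.0},
    {"name": "ACME GmbH", "country": "DE", "revenue": 50.0},
    {"name": "acme, inc", "country": "US", "revenue": 25.0},
]
```

The same three rows become one client or two depending on the level chosen, so any metric built on top (growth, shrinkage, defection) inherits that naming decision.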

From there, developed metrics:

Risk of defection: over next six months, these clients are likely to defect

Growth and Shrinkage: Over next six months, likelihood of account growing or shrinking (same for offering)

One of the issues with the growth and shrinkage metric/algo was that it was hard to reason about. It found patterns that were unexpected, and it was hard to come up with a narrative that explained the results.

Trouble-shooting errant outcomes:

Some you can do without domain knowledge

Others were only possible with domain knowledge (entity resolution and offering definition, for example). One challenge is that you can never be sure that you’ve found all the errant outcomes.

Lessons learnt:

Trading precision and accuracy for interpretability.

Organisational realities include politics and organisational change.

Responsiveness to organisational change requires ongoing data science expertise.

There’s also maintenance debt: For these analytics to stay current they need to be continually cared for and evolved.

Ethnographic Theory and Practice

Designed for Care: Systems of Care and Measurement in the Work of Mobility

Erik Stayton (@onlyabot) & Melissa Cefkin (@melcef) · Nissan Research Center

Nissan is developing autonomous vehicles. How would they operate and provide service to customers?

What would remote monitoring of autonomous vehicles look like?

Went to study buses. Why?

Because it’s an adjacent example of what happens in a supervisory system.

A key role in a bus system is the dispatcher. There are many tasks that happen here that are not just about driving. What are these? How might they shift in an autonomous vehicle?

What was observed:

An interlocking set of roles and skills forms an operational system that merges different perspectives and sources of information. But also systematic attention to features and qualities of service that go beyond operations (i.e. maintaining integrity of infrastructure, caring for passengers, etc).

They posited: A parallel intertwined system of care is fundamental to making mobility possible:

There’s process and relational work that happens at multiple scales for people and things alike

Questions for care-ful design:

- Is the design investing in or eliminating skill and autonomy?

- Is the design attending to where work will shift?

- Will the design do the extra little bit to serve people well?

- Are the right things being measured? (and leaving room for uncertainties)

- Is the success of the design accountable to the unmeasured goods that it is hoped to produce?

Focusing on the first two of those questions:

Operators deal with the unexpected. Getting on a bus is not always simple. Field support anticipates and responds. Sometimes, road supervisors are not able to assist - here the dispatcher steps in. Operators make judgement calls about the safety of themselves and others.

All of those involve skill and autonomy. Operators are responsible for keeping order. They are stewards of their domain. Informal connections and tools matter a lot. This flexibility is really important to the ability to respond to uncertain/unexpected events.

This shows that care is systemic - over different time scales and across different roles.

These multiple flexible ways of attending to buses and people help the system respond to things like mechanical failures.

We have to remember that care is fundamentally a process.

A lot of what we saw depended fundamentally on there being people in place empowered to make decisions to go above and beyond.

“Show me an autonomous system without people in it and I’ll show you a useless system”

Q: Where does the analogy break down? How much of this is predicated on the idea of this as a ‘system’

A: Part of what you get by naming it a system and thinking about it in that way is that you can see how all the individual pieces are enmeshed and work together.

If you are driving around on public infrastructure, you are still engaged in the system of public infrastructure. So, you can put it in a box and look at it in isolation, but when it actually happens in the real world those ways in which it interacts with systems or is situated within a system will still come through, so better to surface it from the beginning.

Screenplay, Novel, and Poem: The Value of Borrowing from Three Literary Genres to Frame our Thinking as We Gather, Analyze, and Elevate Evidence in Applied Ethnographic Work

Maria Cury & Michele Chang-McGrath · ReD Associates

What gives our work distinct value is that ethnography has unique ability to render deep understanding of human activities[?] in situ.

Applied ethnography continually draws insight from other disciplines and practices: dance, yoga, negative space drawings, etc

Rick Robinson started the discussion about how literature can inform ethnographic work.

The value of fiction is ‘bringing life to life’

Challenges facing applied ethnography:

- Framing research to obtain ‘thick’ data in the field

- Making sense of data in teams and with clients

- Making a convincing case with data in challenging environments to mobilize change

Why? Pressures to do work faster, cheaper. Lack of client understanding.

Literary genres can help!

- Screenplay can help in research framing to obtain thicker data

- Novel helps in analysis to turn data into meaningful evidence

- Poetry helps in opportunities development phase to mobilise insight into action

What business does fiction (or literature more broadly) have in the ‘real people’ work that we do? Would it not undermine what we promise to deliver?

Yes there are some differences:

The aim of reading a book is often to choose to be moved, to feel and to experience beauty. Ethnography is about being systematic,

Similarities:

- Are carefully crafted

- Render a world

- Reduce social reality to what is relevant

- Seek higher truths

- Require and evoke empathy

Learning from literature is especially relevant for applied ethnographers because: we must move beyond analysis to convince and persuade, we must incite eureka moments, we must imagine new futures,

Screenplay in a project’s research design or framing phase / similarities with writing a field guide or protocol.

Where is the life? Screenplay could help:

- Imagine how fieldwork might happen

- Plan ahead for an experience that encourages discovery

- Be sensitive to how humans will interact

Using screenplay in this way could be a shortcut in place of a dry run. It encourages you to think about: setting, props, dialogue, action, timing.

Q: visual storytelling vs textual

A: Starting point was with textual material. Think this approach can be generalised across different media/formats.

Automating Inequality: how high-tech tools profile, police and punish the poor

Virginia Eubanks (@poptechworks)

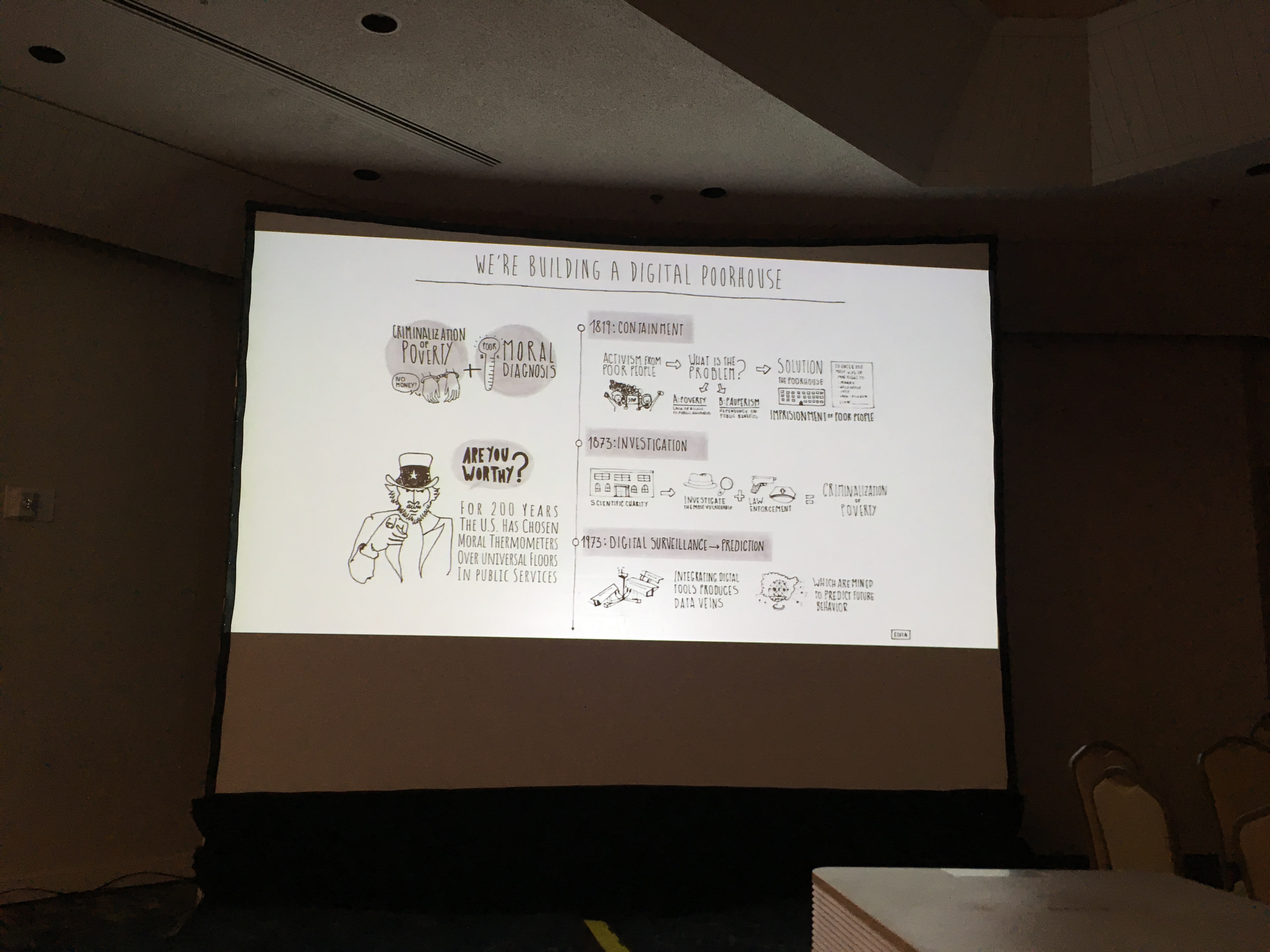

The big picture of what we are doing: While data-driven technology has the potential for good, what we are actually doing is building a digital poorhouse.

It perpetuates a narrative of austerity.

The digital tools that we find in public services are more evolution than revolution. They go back to the 1820s (and before).

After a huge economic dislocation in 1819, economic elites became scared of activism from the poor. Commissioned a study. Was the cause poverty, or pauperism (dependency on public benefits)? The study concluded that it was pauperism.

Solution then became the poorhouse - imprisonment and giving up rights in return for benefits. Death rates in poorhouse were as high as 30% annually.

How did these tools (high tech tools for measuring effectiveness of programmes, establish eligibility, etc) become popular at this specific time?

They promise to address bias but actually hide it. They create an empathy override.

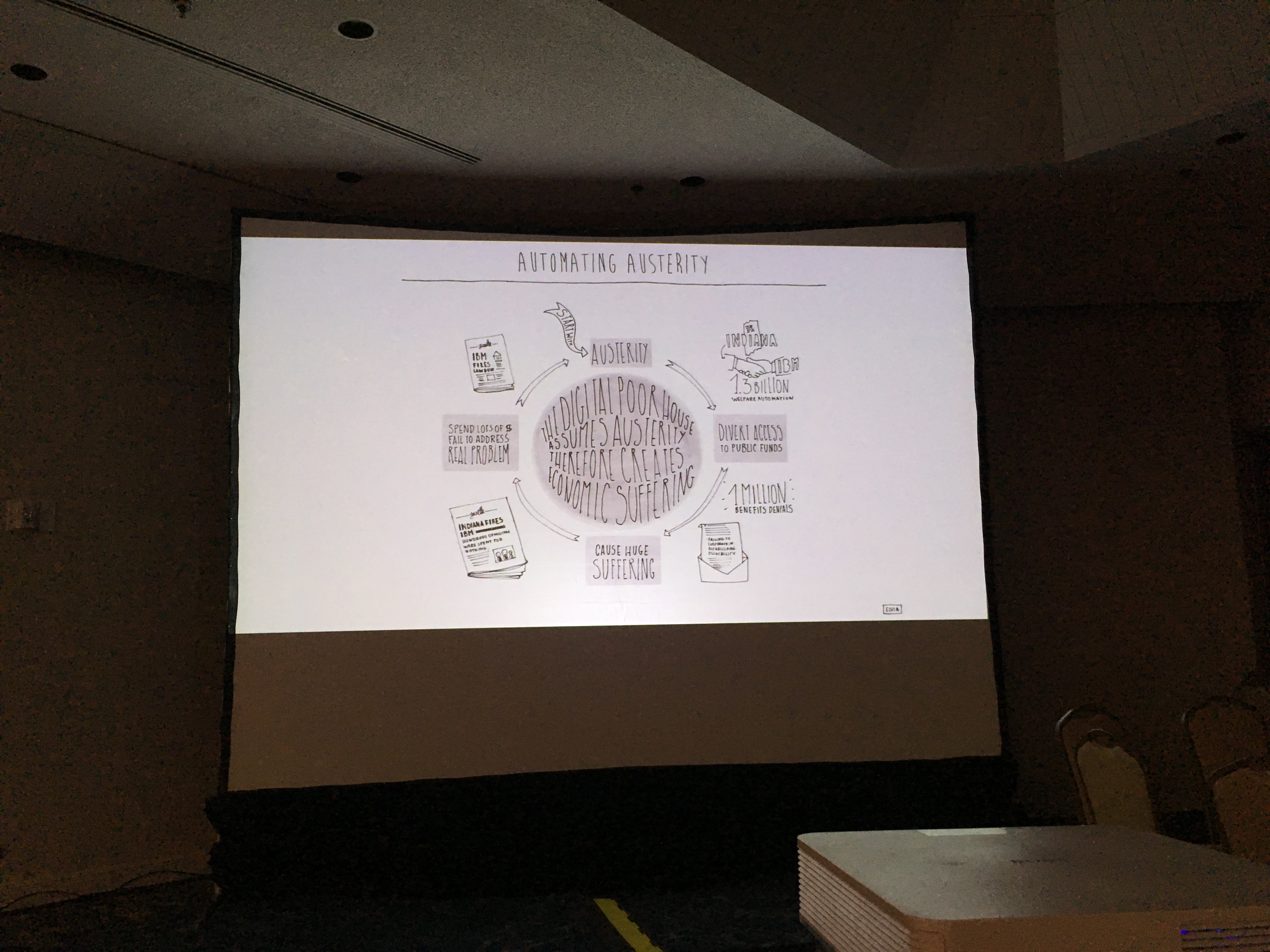

Automating austerity:

A million benefit denials - ‘failure to co-operate’, which in most cases just meant someone had made a mistake in the process. But the move to remote cost centres meant it was really difficult to hold the state accountable. The burden to find what’s wrong and fix it fell to the applicant (instead of the state).

Omega Young of Evansville - missed an appointment for Medicaid because she was in hospital for cancer. She was emaciated and hospitalised and she called to notify that she couldn’t make the phone appointment, but her food stamps and benefits were still cut off.

She died March 1, 2009. On the next day, March 2, she won an appeal for wrongful termination and had her benefits restored.

Reducing casework to a task-based system was profoundly dehumanising. Before automation we listened to what they had to say and acted on it.

“We became slaves to the task system.” That’s the job of the case-worker, to help people wade through a complicated system. Rules became brittle.

It cost the state as well: 8 years in court.

System in Allegheny County (Pittsburgh) to find vulnerable children.

Patrick and Angel - working class parents who have racked up lots of interactions with the youth system that created a risk profile that they were uncomfortable with.

Each interaction was entered into the family’s case file even if investigation found nothing wrong in the end.

The system has 1bn records, but collects data unevenly - only when a parent accesses public services. Result: it over-predicts in low-income families, and under-predicts in professional middle-class families.

Low-income parents worry about false positives; case workers worry about false negatives.
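The two worries correspond to two different error rates, which can be computed separately for each group. This is a minimal sketch: the function name `error_rates` and the toy counts are made up purely to show the calculation and say nothing about the actual Allegheny system.

```python
def error_rates(cases):
    """cases: list of (flagged, harmed) booleans, one pair per family.
    Returns (false_positive_rate, false_negative_rate)."""
    false_pos = sum(1 for flagged, harmed in cases if flagged and not harmed)
    false_neg = sum(1 for flagged, harmed in cases if not flagged and harmed)
    negatives = sum(1 for _, harmed in cases if not harmed)
    positives = sum(1 for _, harmed in cases if harmed)
    fpr = false_pos / negatives if negatives else 0.0
    fnr = false_neg / positives if positives else 0.0
    return fpr, fnr

# Invented toy data: 3 wrongly flagged, 7 correctly cleared,
# 4 correctly flagged, 1 missed.
toy_group = ([(True, False)] * 3 + [(False, False)] * 7
             + [(True, True)] * 4 + [(False, True)] * 1)
fpr, fnr = error_rates(toy_group)
```

Running this per group (for example, families with many public-service records versus families with few) would show whether the errors fall evenly, which is the concern raised above.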

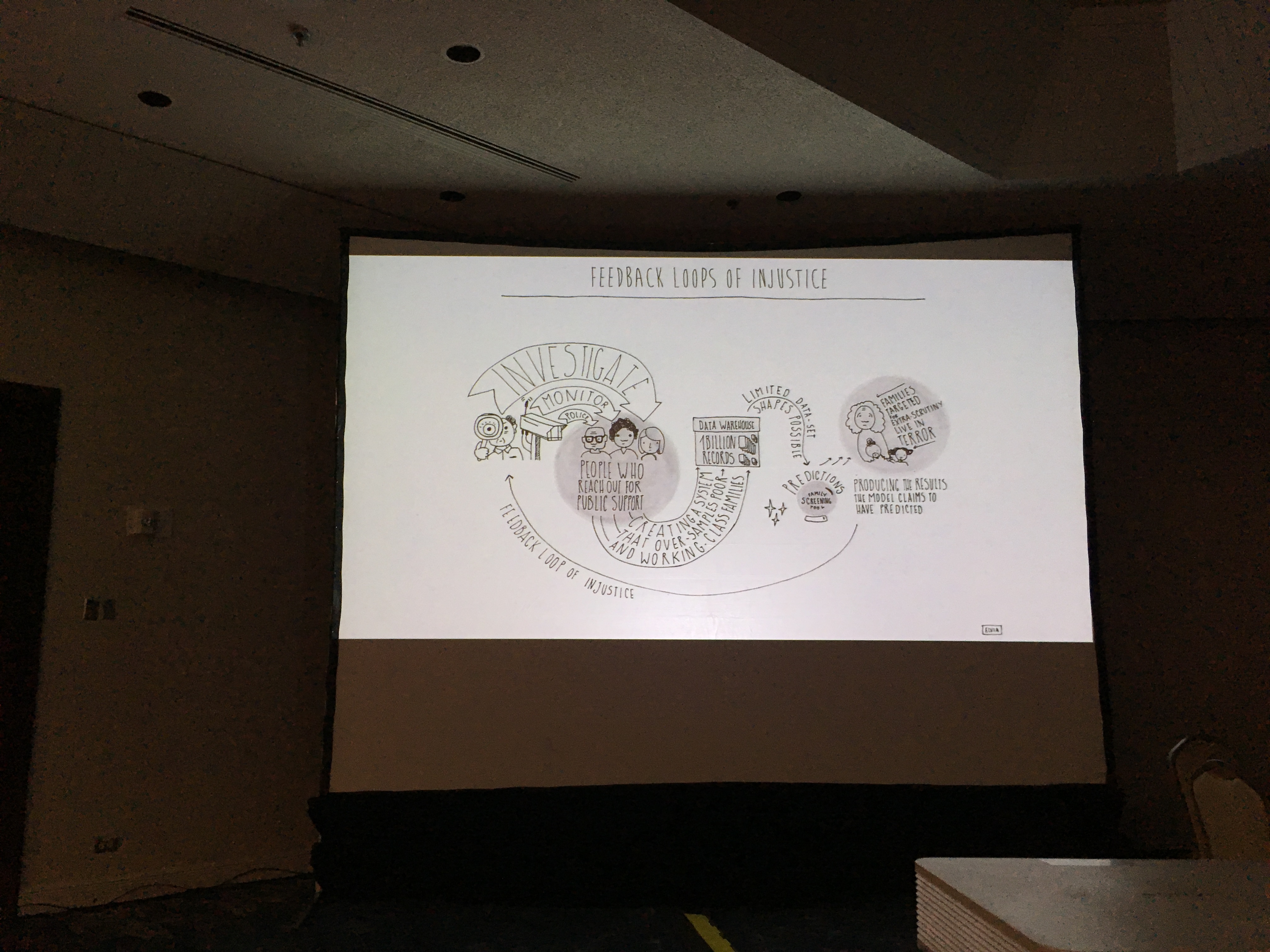

[This feedback loop is basically what Cathy O’Neil writes about in ‘Weapons of Math Destruction’]

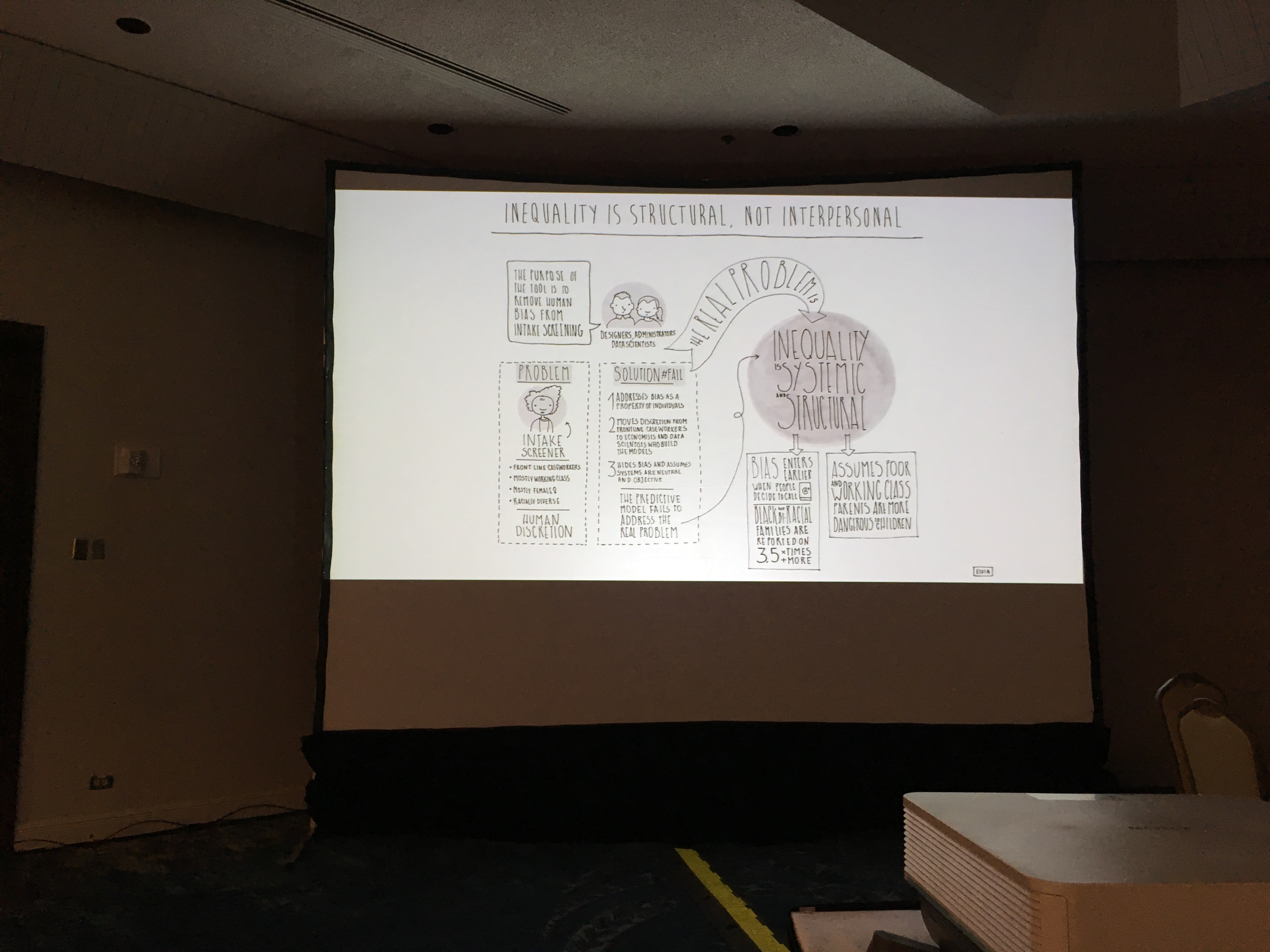

The model’s designers were clear that the purpose was to root out bias (which comes from/happens when discretion happens)

“Discretion is like energy - never created or destroyed, just moved.”

This moves it from the front-line case workers to data scientists and designers - they see bias as individual (rather than systemic)

In this case designers actually did a lot of what critics of algorithmic systems advised: were (mostly) transparent, were accountable, participated in human-centered design.

Inequality is structural, not interpersonal:

By limiting front-line workers’ discretion, it hampers their ability to correct for bias.

Whose discretion do we trust? who has the right to use their clinical knowledge and whose evidence matters?

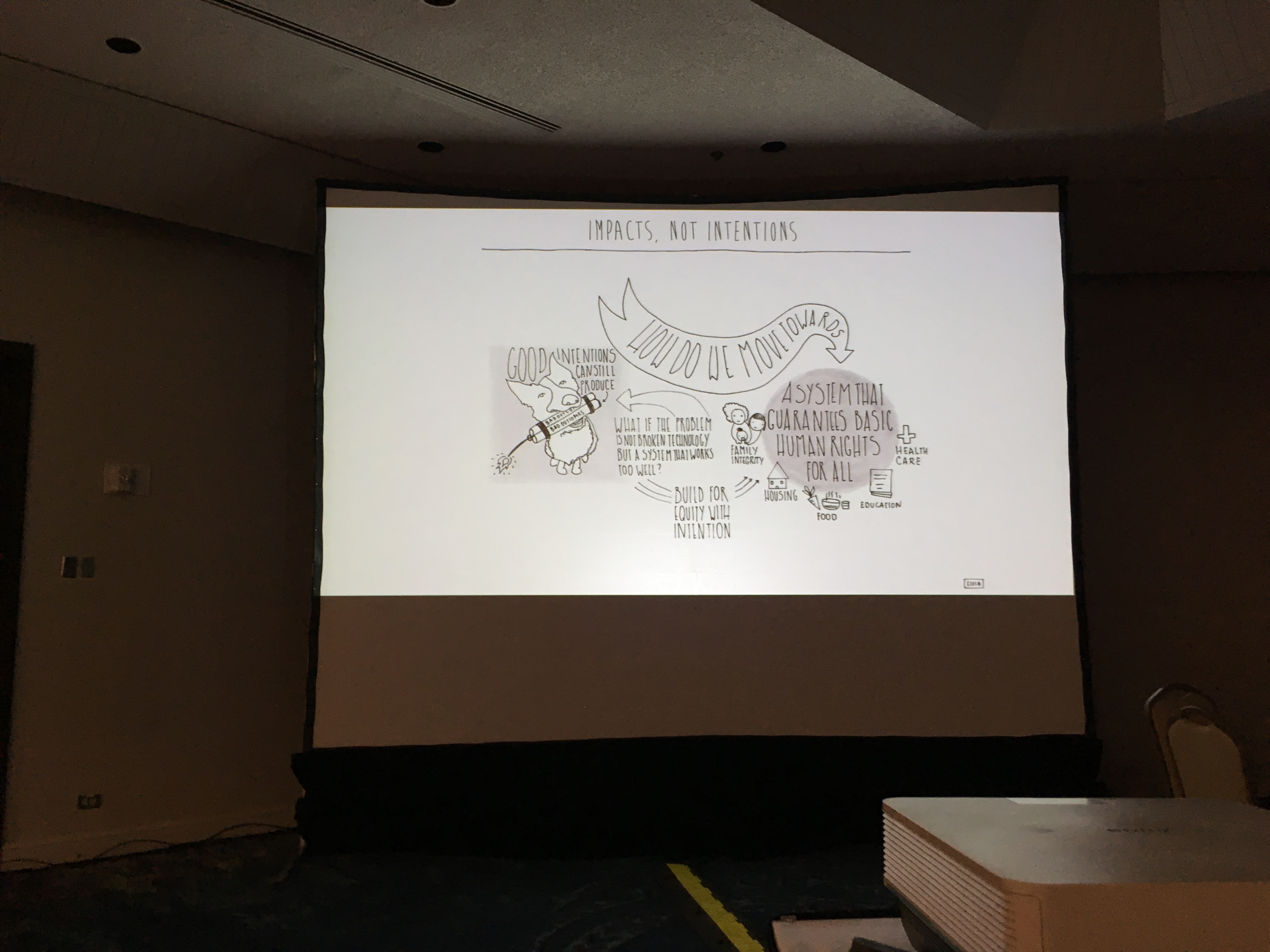

Focus on impacts, not intentions:

What if the problem is not broken system but systems that carry out the deep social programming inside us? What if they are doing their job too well rather than too poorly?

Many of these are presented as systems necessary to triage at scale. But what about the decision and assumptions behind the need to triage in the first place? We are automating rationing and operating within false limits.

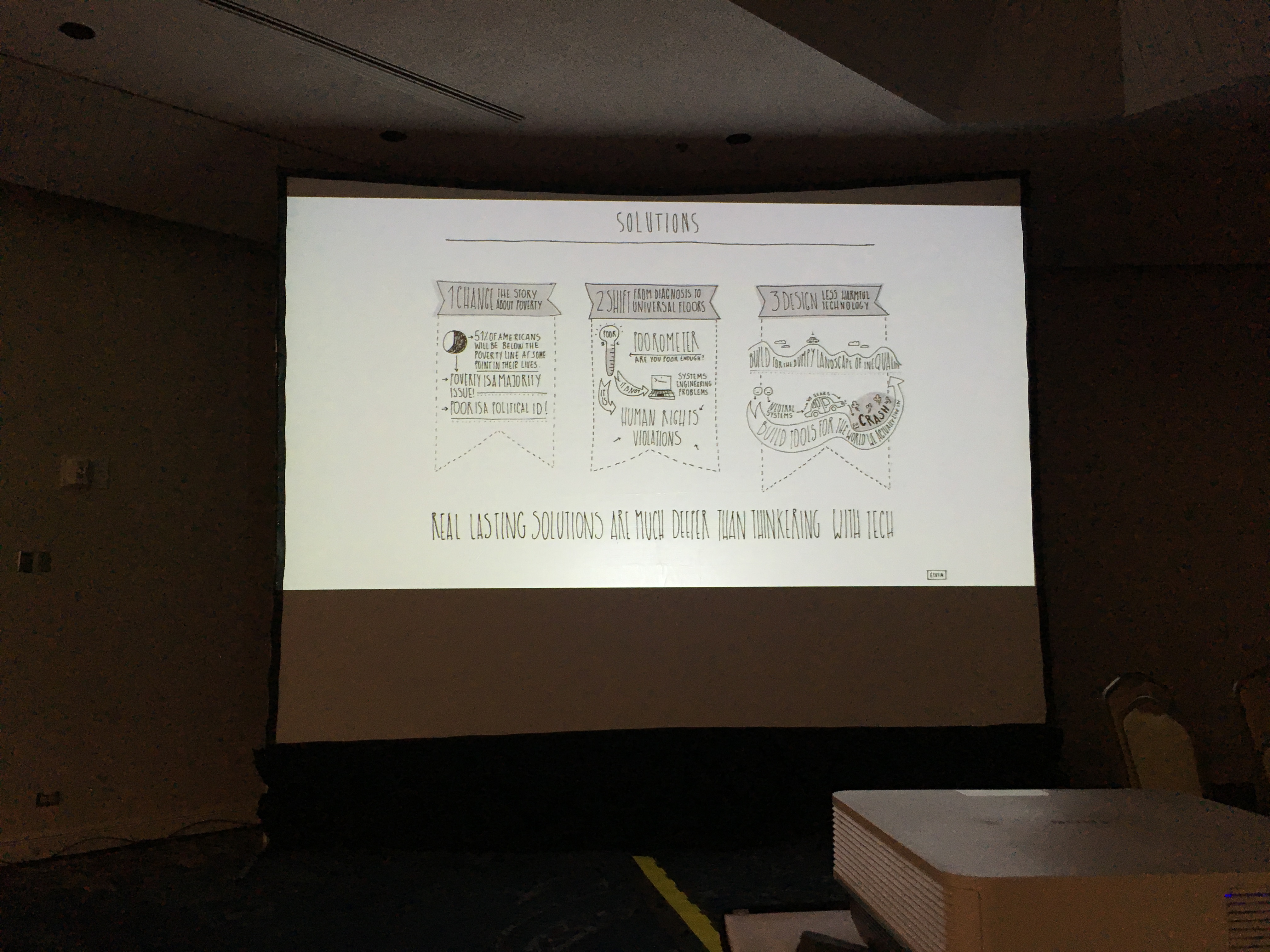

3 kinds of work need to happen at the same time:

- Change the story of poverty. It is not an aberration in the US. 1/2 of Americans will fall below the poverty line at some point in their life. 1/3 will access means-tested benefits.

- Shift from diagnosis to universal floors. Not systems engineering problems, but human rights violations.

- Design less harmful technology.

Designing objective systems just means designing for the status quo.