EPIC 2018 day 3 conference notes

What’s Fair in a Data-Mediated World?

Elizabeth Churchill, Astrid Countee, Nathan Good, Miriam Lueck Avery

This panel will discuss questions of fairness and justice in data-centric systems. While the many social problems caused by data-centric systems are well known, what options are available to us to make things better? Chair Elizabeth Churchill will draw the panelists and audience into conversation about making change on many levels, in our daily work as well as larger-scale collaborations.

What’s fair?

- in accordance with rules or standards; legitimate

- without cheating

- without trying to achieve (or achieving) unjust advantage

Intended and unintended data biases:

- Activity bias

- Data bias

- Sampling bias

- Algorithmic bias

- Interaction bias

- Self-selection bias

- Second order bias

- Interpretation bias

What is evidence and what do we treat as evidence?

The ACM Code of Ethics includes:

2.6 Perform work only in areas of competence

ResponsibleCS.

Nathan Good

“We care deeply” language in disclaimers. We can’t say just that anymore; it’s become meaningless.

But ‘privacy’ and ‘fairness’ mean different things to different people. [Asks audience, who shouts out:]

- compliance

- personal safety

- ownership

- transparency

It’s not about compliance:

“Every one of your users experiences the UX, no one reads the privacy policy.” - Woody Hartzog

Functions that traditionally belong to compliance officers are now shifting to UX. This is a good thing.

GDPR very much based around human rights.

Hoxton case study around retail analytics that track how shoppers move around a mall. Instead of tracking and showing everything about the person, only show the feet - most clients only care about the aggregate anyways.

Astrid Countee (@ianthro)

Data for Democracy. Became more interested in technology when she found that she didn’t have access to the team doing work that she was interested in. Became aware of the need for the work that ethnographers and anthropologists do - and how more of them need to not just be in a consultative role but be in the room when things get built, and be at the table when decisions get made.

A lot of technologists are aware that they don’t have the human insight skills, but they also think that no one else has those skills, [in part] because we’re not loud enough about what we do.

Miriam Avery

Works at Mozilla. Was at Institute for the Future.

Most pragmatic resource: Lean Data Practices

Respect is a pre-requisite for trust. Thinking about what we collect and when we collect it is fundamental.

Provocation: As researchers, I believe that you collect qual and quant data in respectful ways. [However] As researchers in large powerful companies with monopolistic tendencies, the telemetry data that you use to triangulate your data is collected in a coercive way.

Questions and Opinions

Miriam: mentions the case of Roomba collecting spatial data about people’s homes. Despite this, consumer uptake of Roomba did not diminish. But the example became a case study in advocacy for GDPR regulation, so it all comes back.

Q: I think it’s disrupting the team when you are raising all these risks, and it’s demoralising the team

Astrid: To shy away from talking about risk is to invite failure. If my job is to make the product better then I’m doing my job when raising these issues. It’s important that we as a team can disagree.

Q: What about evidence of all the risk you talk about?

Astrid: We don’t have evidence of all the great things the product will do anyways.

Miriam: Also need to stand up for each other - we cannot fight this alone.

Q: I love shopping, but this whole anonymous thing is not going to give me a discount.

Nathan: Actually given the quality of the data we are probably going to give you the wrong discount anyways.

Q: Transparency

Nathan: Materialisation is a better phrase than transparency.

Q: Standards committee - why is doing this stuff useful to the company when it takes time away from your day job?

Astrid: It has actually become easier to justify this recently given some of the blow-ups. It’s important that individuals in the company feel capable of acting without backlash [so they can do work in advance to prevent disasters from happening].

Q: What’s the use of standards and codes if there are no consequences?

Elizabeth: yes, consequences matter, but there also has to be a starting point.

Q: Whose responsibility is it to get researchers in the room?

Astrid: It should be the employer, but often this doesn’t happen. So I had to learn what they do and how they talk so I can push back and fight to be in the room. Not necessarily that everyone needs to learn how to code and do data science, but to at least speak the language and not get excluded because of jargon.

Nathan: Examples are helpful. Some places just suck.

Elizabeth: The community can also help each other and provide perspective of what good and respectful looks like. Some places suck but many people don’t suck - researchers can use empathy to reach out more too.

Comment from the floor: Being approached by researchers

Comment from the floor: Mutual suspicion (between data science / anthro) starts at university level

Comment from the floor: Hegemony of the techie.

Comment from the floor: Managers and executives often don’t know much about either qualitative OR quantitative research.

Possibilities and Limitations for Moving Forward

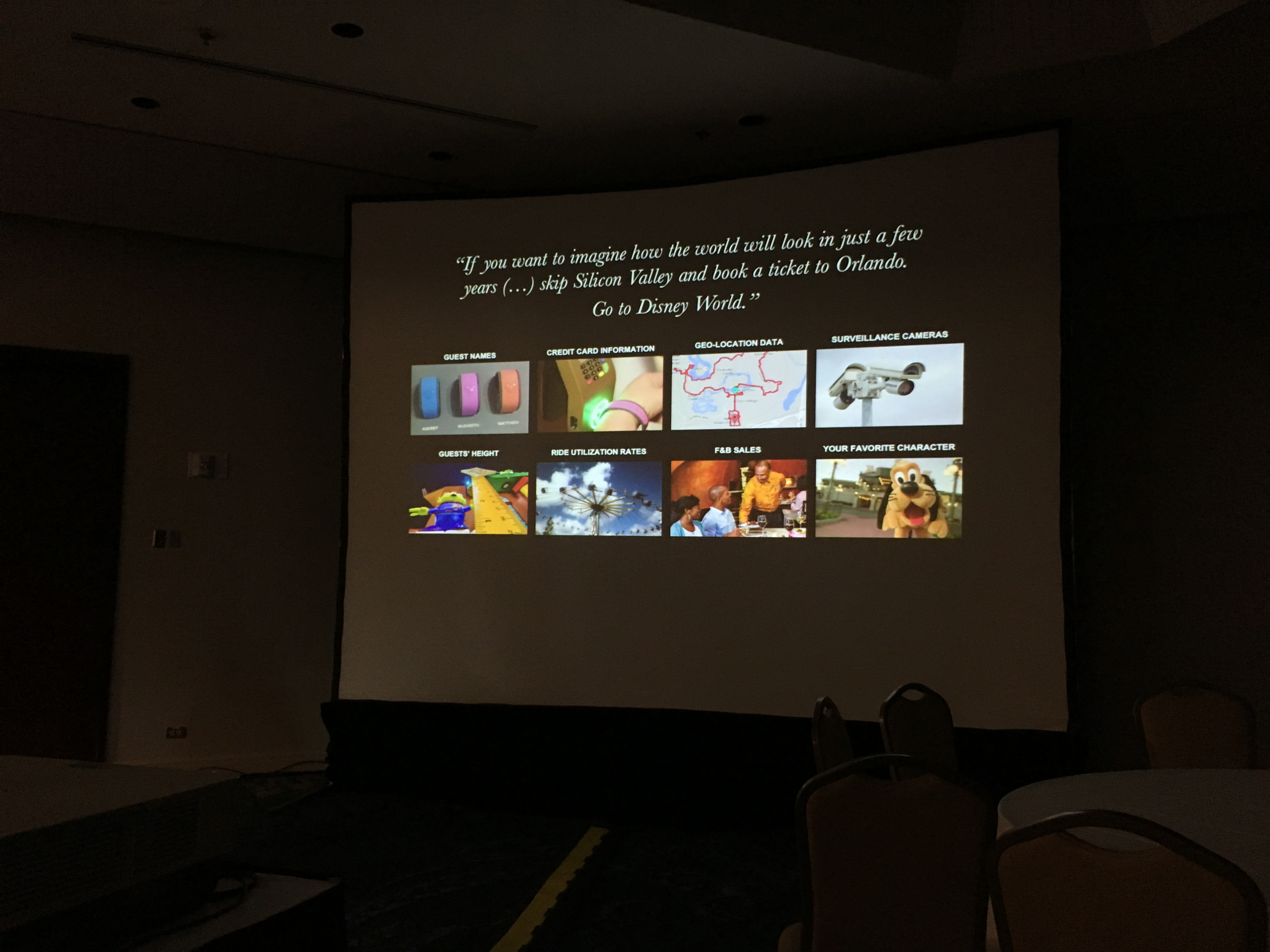

Below the surface of the data lake: An ethnographic case-study of the detrimental effect of big data path dependency at a UAE Theme Park

Jacob Wachmann, Andreas Juni, Dave Baiocchi, William Welser · ReD Associates

Theme parks as el dorado for data scientists because so much data gets collected.

And also theme parks as indicators of the future.

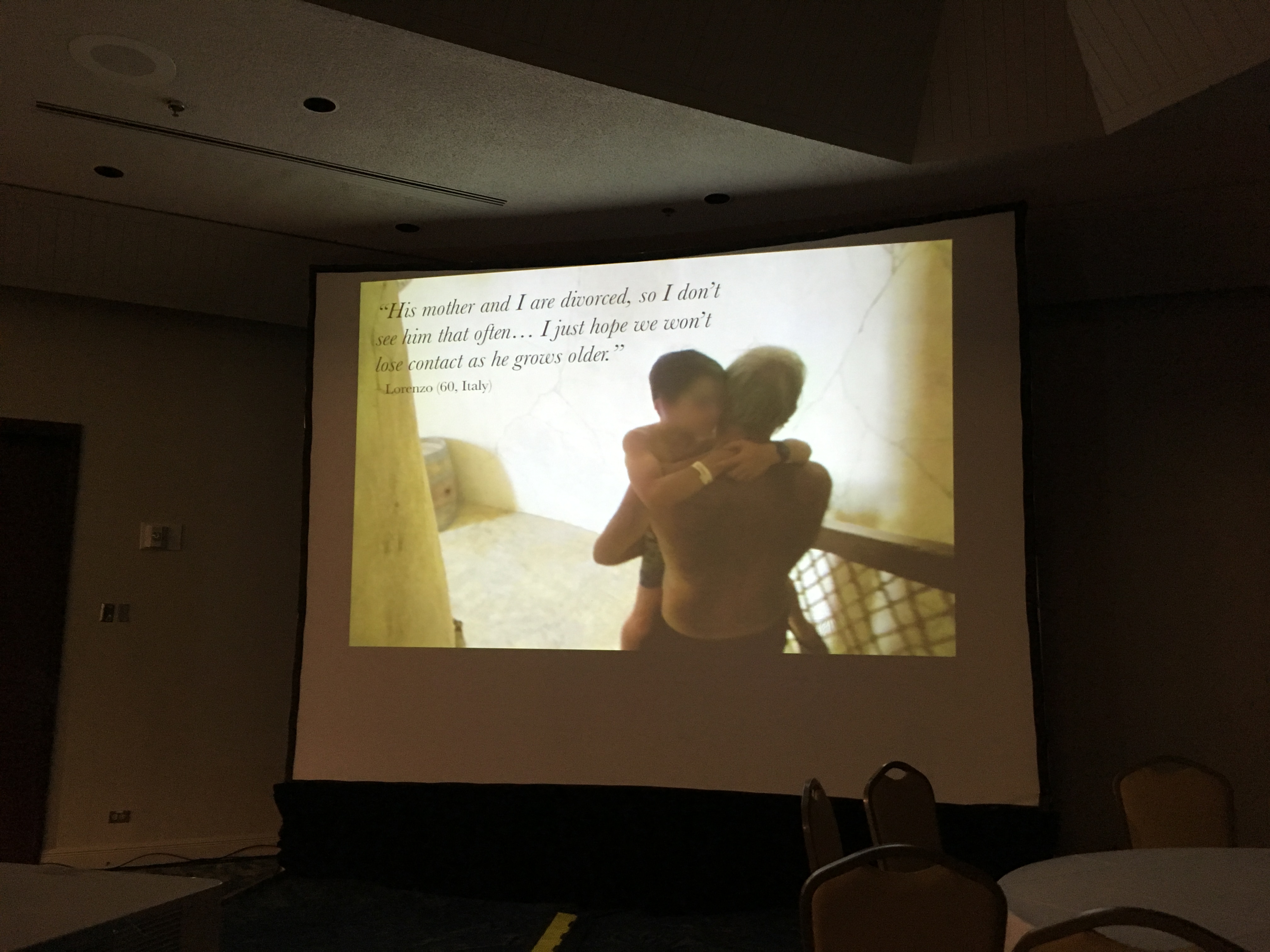

But what really is at the heart of theme parks? This sense of bonding.

How to measure ‘bonding’? Started off with some KPIs but they were deficient.

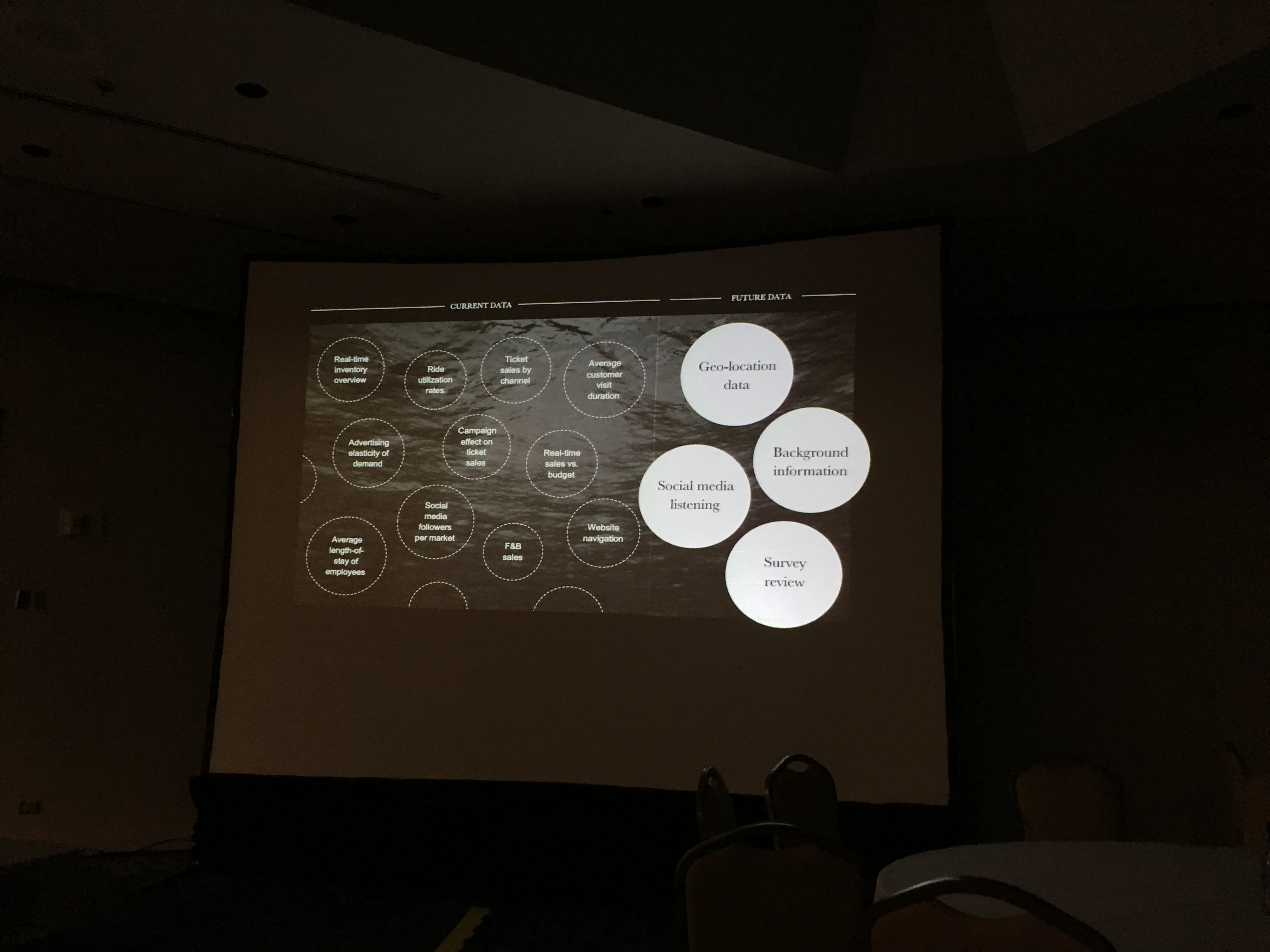

Because, on looking deeper, it became clear that the data being collected were about the business rather than the customer.

So - we could not build the bonding index using data they have today. You need new data.

The problem is that there often isn’t an assessment of what data we need to collect. They are just interested in: we have this data lake, what can we get from it?

Just Add Water: How Mixing Qualitative Methods of Design Research with Human-Centered Data Science Combined to Deliver Software to Improve Customer Service

Ovetta Sampson (@writingprincess) · DePaul University

Data scientists and design researchers and environmental scientists and design directors all working together from beginning to end.

The call center industry service problems:

80% of people prefer self-service. But when they call someone, they really want to talk to someone, but they often don’t get to do so.

CEO of cruise company made some undercover customer calls to his own customer service, and it was frustrating. So he asked IDEO to help fix it.

Treated data as a stakeholder and interviewed it.

“When you have conversations about which data to include and omit, you can build a tool that does not compromise the user’s privacy.”

“Data science methods boost people’s ability to do what they do better.”

Hired Second City actors to act as customers, but gave them real data of customers as basis for their acting.

“Data and Ethnography share equal billing in project outcomes.”

‘Look for the information flow’ - where is information stored? In on-site visits they found informal information storage methods (like own notebooks, etc) because they didn’t trust the system.

In the end, it was all about the pain and frustration of people not being recognised for who they are.

Did 2-week prototype of the tool. Ethnography also led to some changes in org structure - everyone who called their service initially reached a sales agent. Also had to change incentive structure.

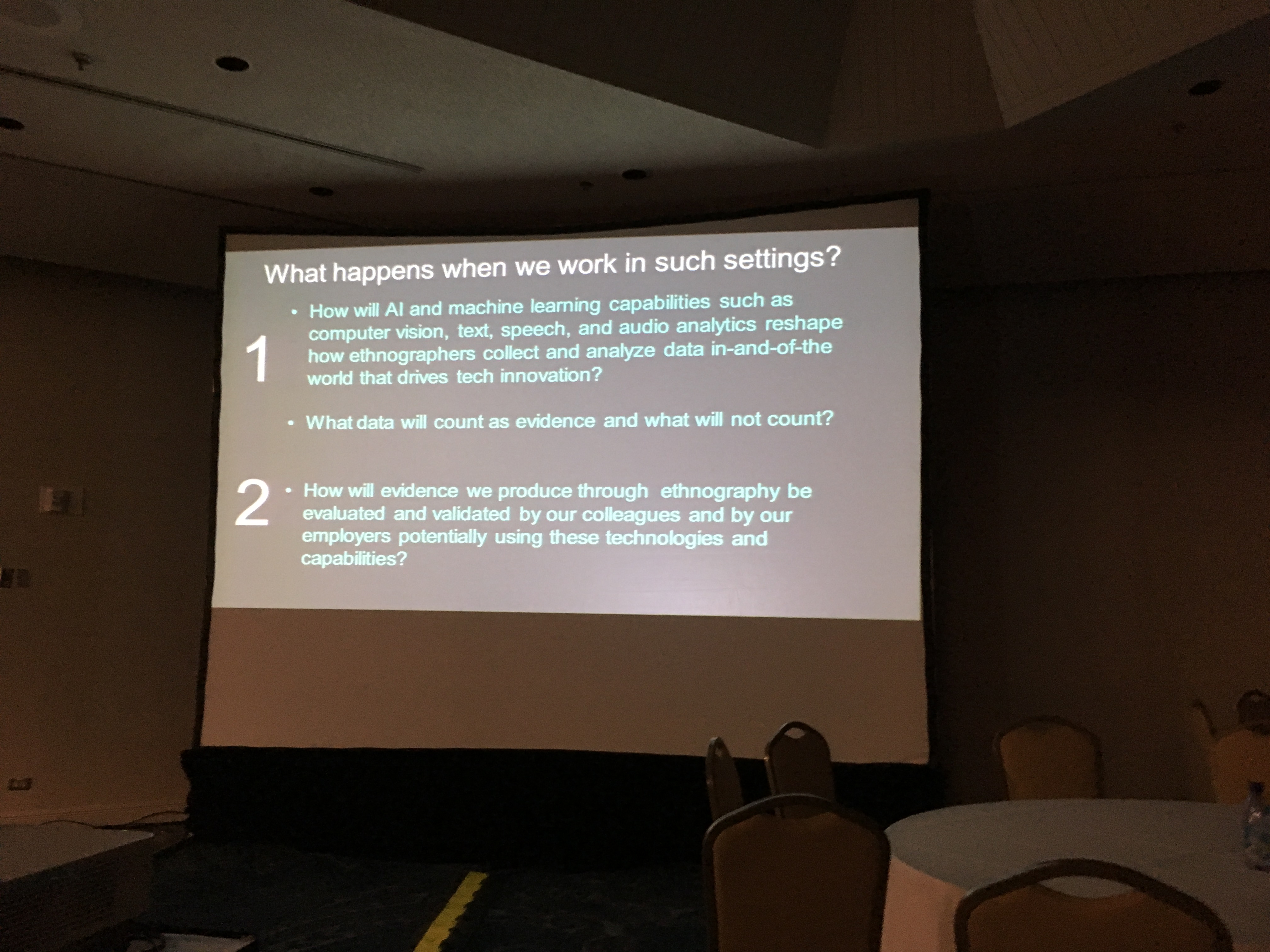

Scale, Nuance, and New Expectations in Ethnographic Observation and Interpretation

Alexandra C. Zafiroglu & Yen-Ning Chang · Intel Corporation

Imagine new tools for ethnographers in next 20 years.

Stories tech industry tells itself about innovation and data use:

Evidence has shifted from being about people and their experiences, to the digital traces created (at volume/scale) by people - the human subject becomes invisible.

Future tools can enhance ethnographers’ abilities:

For example - in running video and audio through ML, can emphasise non-speech sounds that we didn’t notice.

Overall, machines can help extend the scale at which we analyse data and increase the nuance in our interpretation.

[Interesting issue raised here: As technology changes, how much and which parts of the research process need to happen synchronously and in an embodied way by the researcher, and how much of it can be offloaded to machines to be done asynchronously?]

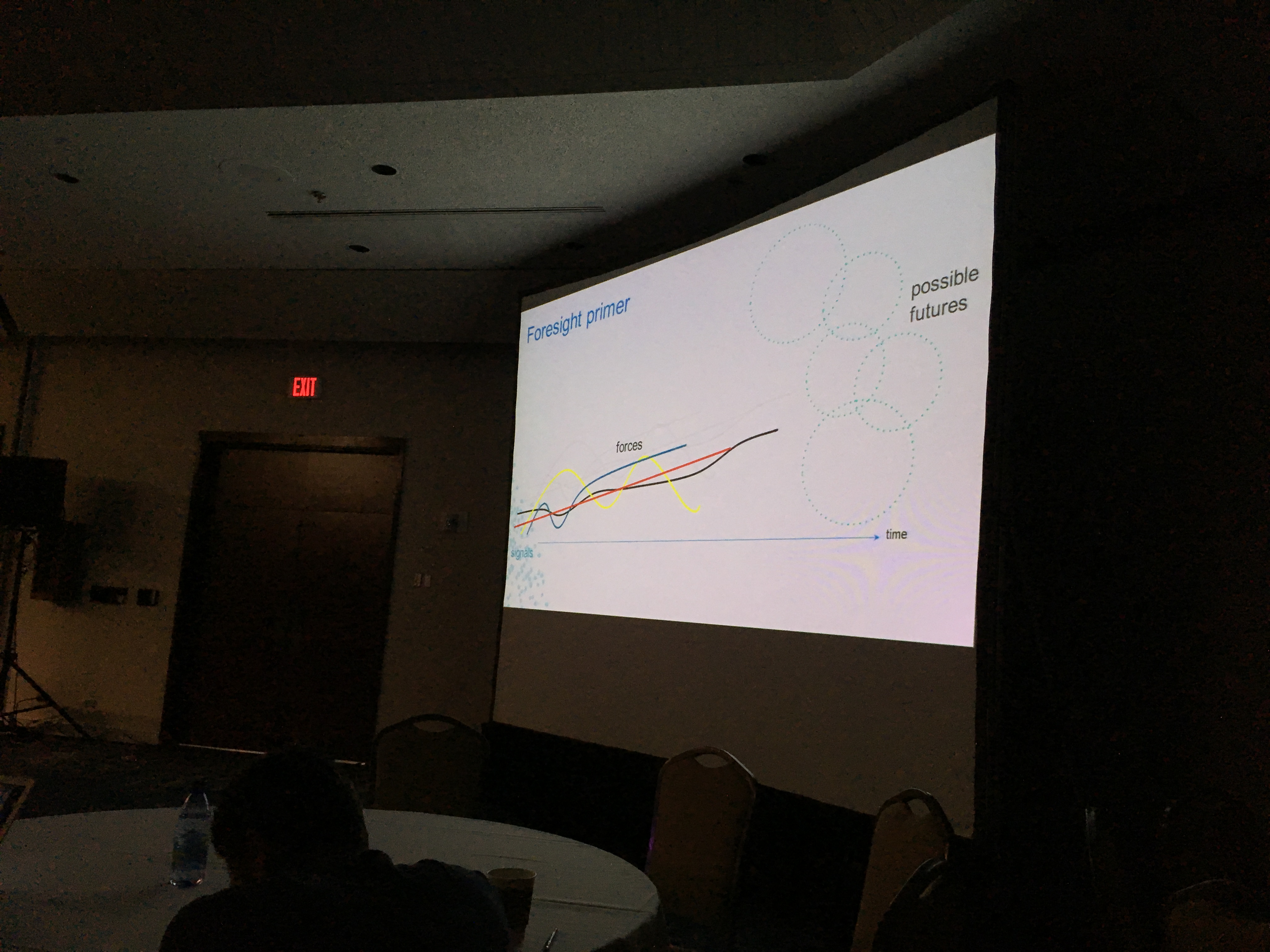

The Future is Yours

Donna Flynn (@donnakflynn)

Donna Flynn is Vice President of WorkSpace Futures and Market Insights at Steelcase. She leads a global team of researchers that delve into wicked problems around the future of work and translate those insights to inform the design of strategies, products, and services.

We are at a crossroads of change. With the rise of digitised data, where is the human?

What unites us: Human empathy is a fundamental lens we bring to our work. It’s our third eye.

What unites us: We want to create change.

I’m standing on this stage as a business leader.

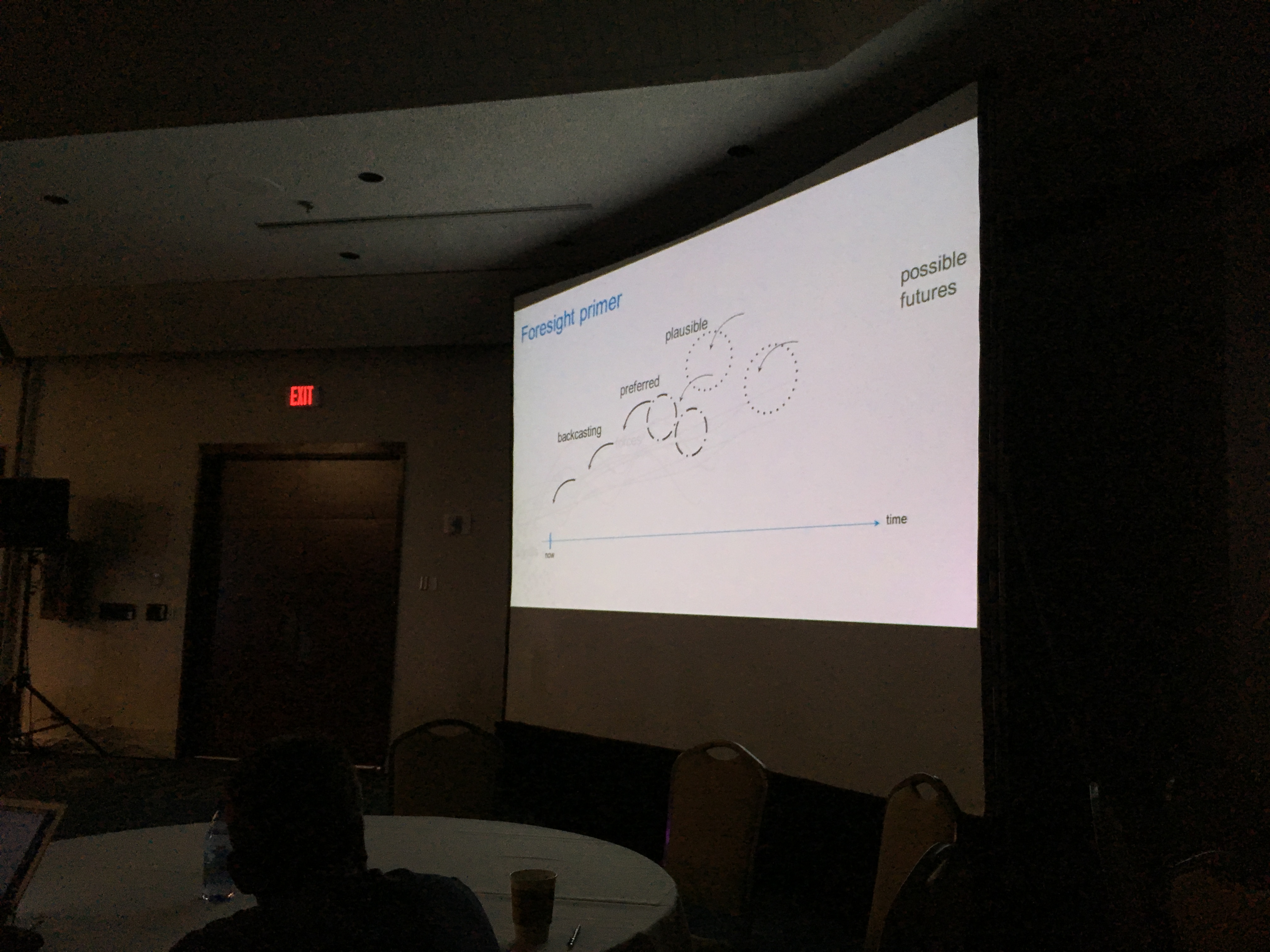

Imagine the future of EPIC using foresight - which offers a way to bridge design thinking with business strategy.

What are the forces of change?

- Digital Transformation

- Augmented intelligence

- Purpose-driven work and workers

- Humanised Performance

(Physical space shapes behaviour. Behaviour and values over time create culture.)

Possible futures:

- A future where big data trumps all

- A future where everyone needs to analyse, interpret and act on data

- A future where machines will do ethnography

- A future where some producers are as valuable as the product

There’s going to be an increasing divide between highly specialised/valued jobs and others. There’s going to be a fundamental shift between the employer and the employee for those jobs. Employees are bringing new demands and new voices to the workplace.

The value of data will be in its meaning. Not just about the customer and the user but in terms of business value and ability to translate it into action and strategy. Skill as storytellers will be a huge boost for this.

Human-centered expertise will be valued equally to engineering- or business-centered expertise.

A human renaissance will flourish in a world of data-driven everything.

We must immerse with our data partners.

We must wield our superpowers as systems thinkers.

We must nurture our communities of practice: diversity makes us stronger, and we thrive on human connection.

We need to get comfortable with the concept of value creation. When we can speak the shared language of business, our ability to create change magnifies.

We need to learn to lead. You need to create change at a systems level, and that requires different ways of leading. It’s easier to create change in your tribe than at the level of the nation, and these companies are at the level of a nation. You can champion your point of view but those POVs need to be informed by active listening to others. You need to be strategic about your networks of influence. (Being strategic mostly just means having lots of lunches and coffees.)